Dialing in Your Detection Coverage with MITRE ATT&CK

One of the recurring tasks owned by Gigamon Applied Threat Research (ATR) is managing the ThreatINSIGHT solution’s detection coverage: prioritizing the detections we create and validating their effectiveness. Originally, I would just pick whatever “felt right” or was the “threat of the day” to work on each week. As the team grew, we created better detection rules by measuring what we already covered and articulating the prioritized gaps we needed to fill. Now I sit down approximately four times a year (probably a coincidence) to think through what detection content should be on the team’s pipeline (to-do list) over the next 3 to 12 months. This blog post highlights my current methodology for planning detection coverage: prioritizing the topics we need to detect, evaluating our existing detection coverage, and communicating our coverage to teammates and management.

What Questions Need Answering?

What Are Your Priorities?

You may have heard the adage “when everything is a priority, nothing is.” We cannot defend ourselves equally against every potential threat; if we spread ourselves too thin by trying to boil the ocean, we leave ourselves undefended against the most consequential dangers.

When I think about prioritizing threats, I often focus on risk. Risk is commonly expressed as the equation Risk = Threat * Vulnerability * Cost. While far from perfect or precise, this equation offers a quick way to evaluate the risk associated with a vulnerability. To use it, first identify the significant vulnerabilities your company exposes. For each vulnerability, estimate the likelihood that a capable threat actor will target it (threat), how exposed it is (vulnerability), and the associated costs (time, money, reputation, and so on) of recovering from an incident (cost). The only math involved is straightforward multiplication. The vulnerabilities with the highest results are the ones most in need of detections.

As an example, a hospital should understand the risk associated with the compromise of medical devices. How likely is it that threat actors have the malware and intent to target medical devices at the facility? What harm would compromised medical devices cause? How well do the medical devices protect themselves against intrusion? What would it cost (the liability for patients injured by compromised devices, liability for data leakage, loss of reputation, decreased future revenues, enhanced security measures to remove the intruders and return to full business operations) if malware were installed on the medical devices? If the hospital assesses all three variables in the risk equation to be significant, then the overall risk is high and it should prioritize medical device detections to mitigate the risk. Unfortunately, this is only one of many risks the hospital must weigh against one another.
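The risk equation lends itself to a quick script. The following is a minimal sketch, assuming each factor is rated on an illustrative 1 to 5 scale; the `RiskItem` class, the `prioritize` helper, and every rating below are hypothetical and exist only to show the multiplication and ranking.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RiskItem:
    """One vulnerability, rated on an assumed 1-5 scale per factor."""
    name: str
    threat: int         # likelihood a capable actor targets it
    vulnerability: int  # how exposed or weakly protected it is
    cost: int           # impact of an incident (time, money, reputation)

    @property
    def risk(self) -> int:
        # Risk = Threat * Vulnerability * Cost
        return self.threat * self.vulnerability * self.cost


def prioritize(items: list[RiskItem]) -> list[RiskItem]:
    """Return items ordered from highest to lowest risk."""
    return sorted(items, key=lambda item: item.risk, reverse=True)


# Made-up ratings for a hospital, for illustration only.
items = [
    RiskItem("medical devices", threat=4, vulnerability=4, cost=5),
    RiskItem("guest Wi-Fi", threat=3, vulnerability=2, cost=1),
    RiskItem("billing system", threat=4, vulnerability=2, cost=4),
]

for item in prioritize(items):
    print(f"{item.name}: {item.risk}")
```

The absolute numbers mean little on their own; the value is in the relative ordering, which gives a starting point for the conversation about where detections are needed first.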

What Coverage Do You Already Have?

After I determine the priorities for what needs to be covered, I still need to understand the mitigations already in place before planning out the detection content the team will work on. Here’s where things start to get fun. Just as there are different ways to skin a cat (not that I have experience in taxidermy), there are also different ways to measure and communicate coverage for your detection content. Coverage is commonly measured against targeted, known threats (intelligence-based) and against behaviors (such as those catalogued in the MITRE ATT&CK framework).

Intelligence-Informed Coverage

The primary method I have seen others use to measure coverage is validating detections against known threats. This is commonly utilized by automated security testing vendors that, for example, launch a barrage of malicious software to see which detections are flagged. This can help show a security tool’s coverage against real-world, specific threats. We do something similar when we look at the top ransomware families and ensure we have rules to detect their specific activities or fingerprints. Intelligence-informed coverage often measures indicators of compromise (IOCs): artifacts associated with identified malware and threat actor activities.

In this case, having coverage means being able to detect the specific threats that are relevant to your organization (based on your risk priorities). We all know that threats morph to avoid detection, and new threats constantly emerge. My measurement of coverage against such threats changes as frequently as the threats themselves change. Think about the regularity with which AV (antivirus) providers release signature updates. They are constantly trying to maintain a level of threat coverage; if they stop releasing updates, their products quickly lose effectiveness.

An advantage of intelligence-informed coverage is that it’s easy to measure. I am aware of a certain number of IOCs and can calculate how many of them are flagged by our detections. While easy to compute, this metric does not necessarily convey the true coverage of one’s detections.
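As a rough illustration of how simple this metric is, the sketch below computes the fraction of known IOCs flagged by at least one detection. The `ioc_coverage` function and all indicator values are hypothetical placeholders (documentation-range IP addresses and `example` domains), not real threat data.

```python
def ioc_coverage(known_iocs: set[str], flagged_iocs: set[str]) -> float:
    """Fraction of known IOCs that at least one detection flagged."""
    if not known_iocs:
        return 0.0
    # Only count flagged indicators that are in the known set.
    return len(known_iocs & flagged_iocs) / len(known_iocs)


# Placeholder indicators for illustration only.
known = {
    "198.51.100.7",
    "203.0.113.42",
    "c2.example.com",
    "sha256:placeholder-sample-1",
}
flagged = {
    "198.51.100.7",
    "c2.example.com",
    "sha256:placeholder-sample-1",
    "unrelated.example.net",  # flagged, but not in the known set
}

print(f"IOC coverage: {ioc_coverage(known, flagged):.0%}")
```

A high percentage here says only that the known indicators are caught; it says nothing about how the detections fare once an attacker swaps those indicators out.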

Behavior-Informed Coverage Using MITRE ATT&CK

Intelligence-informed detection is an important foundation of every detection system, but I can’t overlook our detection coverage of generic threats. In other words, detecting IOCs of known threats is a baseline; we also have to detect threats that do not yet have associated IOCs, such as modified malware and zero-days. As a detection engineer with a background in offensive security and threat hunting, I primarily examine behaviors to find opportunities for detection. My go-to reference in this arena is MITRE’s ATT&CK framework. If you need a primer on it, look no further than this ATT&CK 101 blog post by the creator of MITRE ATT&CK. The framework is well documented, well supported, and increasingly integrated into security tools such as Gigamon ThreatINSIGHT™.

When thinking with an ATT&CK mindset, coverage means having sufficient capability to detect malicious utilization of specified tactics, techniques, and procedures (TTPs), independent of any IOCs used to identify a specific strain of malware or an attacker’s habits. Attackers know what IOCs have been created to identify their activities, and they can easily change their malware, command and control servers, filenames, and so on to avoid signature detection. Our coverage against adversaries’ behaviors is unlikely to change significantly in the short term; our detections continue to identify malware that was changed to avoid an IOC as long as it retains the same core behaviors. Behavior-based detections take longer to research and create, but they remain effective for as long as attackers use the identified technique.

ATR creates both intelligence-based and behavior-based detections. We continually receive high-priority intelligence identifying a new threat and quickly write a corresponding detection. When I plan the Detection Research team’s content development efforts in advance, however, I prioritize behavior-based detections. I set aside enough time for writing behavior-based detections with the expectation that less long-term maintenance will be needed to keep them current. (The length of time needed to create behavior-based detections is another reason I now plan 3 to 12 months out.)

Visualizing Coverage of Behavioral Threats

As previously discussed, my preferred framework for discussing and cataloguing behavioral threats is MITRE ATT&CK. Several methods exist for measuring and visualizing detection coverage using ATT&CK. Pick the one(s) that work for you based on your goals and audience. I will discuss some common options seen in the industry, as well as my preferred option for our use case.

MITRE released the open source ATT&CK Navigator to allow users to visualize the ATT&CK matrix according to different use cases. The figures below use ATT&CK Navigator to show examples of each type of coverage discussed.

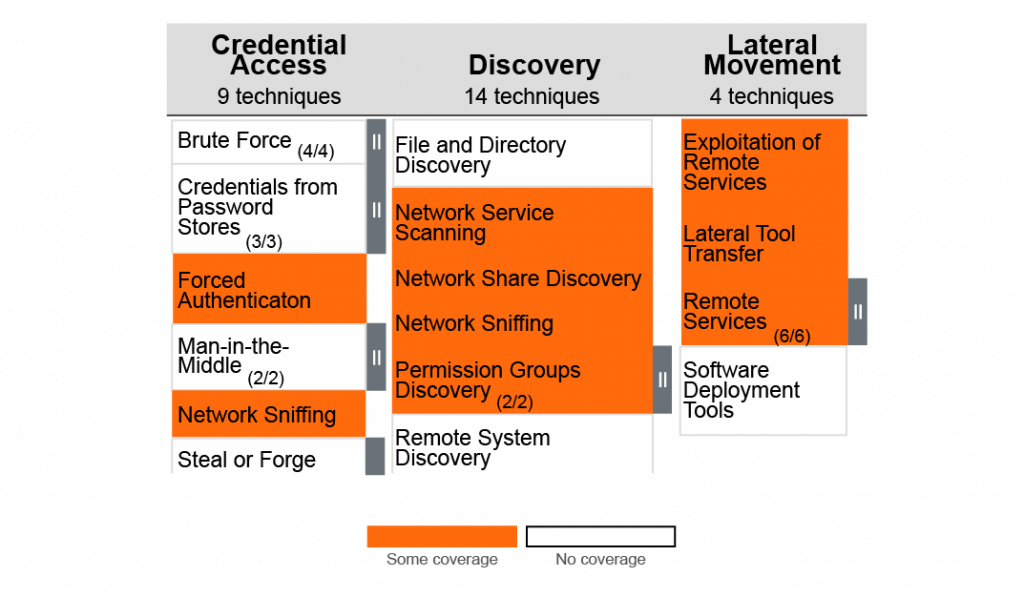

Binary

The most obvious method to measure coverage is a binary designation: You either have some level of coverage for a technique or you have none. This is best suited for marketing, where having the slightest inkling of a detection rule can be passed off as covering some malicious technique, regardless of the false positive (or false negative) rate.

In all seriousness, binary displays of coverage are intended for simple presentations where more delineation would be unhelpful or confusing. They can also help highlight areas of weakness. On the con side, they are rarely helpful for prioritizing content development, and they often communicate a misleading message.
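A binary view is straightforward to generate from a rule inventory. The sketch below builds a dictionary shaped like an ATT&CK Navigator layer, scoring each technique 1 (some coverage) or 0 (none). The field names (`techniqueID`, `score`, `gradient`, and so on) reflect my understanding of the Navigator layer format and should be checked against the layer specification for your Navigator version, which typically also expects version metadata. The `binary_layer` helper and the sample technique sets are hypothetical.

```python
import json


def binary_layer(covered: set[str], in_scope: set[str]) -> dict:
    """Build a Navigator-style layer scoring each technique 1 (covered) or 0."""
    return {
        "name": "Binary detection coverage",
        "domain": "enterprise-attack",
        "techniques": [
            {"techniqueID": tid, "score": 1 if tid in covered else 0}
            for tid in sorted(in_scope)
        ],
        # Two-color gradient: white for no coverage, blue for any coverage.
        "gradient": {"colors": ["#ffffff", "#66b1ff"], "minValue": 0, "maxValue": 1},
    }


# Hypothetical inventory: techniques in scope and those with at least one rule.
in_scope = {"T1021", "T1046", "T1059", "T1071"}
covered = {"T1059", "T1071"}

layer = binary_layer(covered, in_scope)
print(json.dumps(layer, indent=2))
```

Note how much information is thrown away: a technique with one noisy rule and a technique with a dozen well-tuned rules both receive a score of 1.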

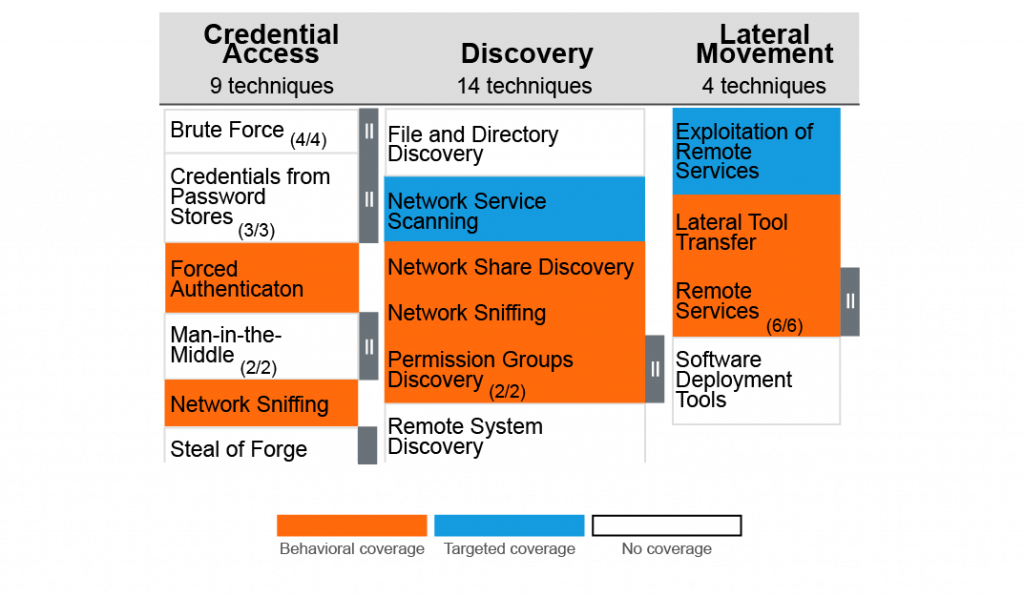

Differentiating Intelligence vs. Behavior Coverage

A second option for measuring detection coverage is a slight modification of the first. It adds some more context, while still allowing for a single, low-quality rule to “cover” an ATT&CK technique. Instead of merely saying we have coverage, we differentiate whether the underlying rules are based on behaviors or on intelligence about specific artifacts. We represent ATT&CK techniques covered by TTP-level rules with one color and those covered only by targeted, tool-specific (IOC-based) rules with another. This gives just enough context to visually identify where coverage is more resilient (and perhaps more all-encompassing) versus those techniques where the only rules are superficially written to target specific tools or indicators. While I recommend that teams target detection content across the spectrum (see DETECTRUM by Steve Miller), it is still valuable to quickly see which ATT&CK techniques are missing coverage.

This representation is intended for enlightened practitioners and savvy CISOs. It is just enough detail to show the techniques where coverage may be more resilient to attacker innovation.
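One way to produce this two-color view is to tag each rule as behavior-based or IOC-based and let behavior win whenever a technique has both. The sketch below assumes a rule inventory expressed as `(technique_id, kind)` pairs; the `classify_techniques` and `layer_entries` helpers, the color values, and the sample rules are all hypothetical, and the `techniqueID`, `color`, and `comment` fields follow my understanding of the ATT&CK Navigator layer format.

```python
BEHAVIOR_COLOR = "#31a354"  # technique has at least one behavior (TTP-level) rule
IOC_COLOR = "#fdae6b"       # technique is covered only by IOC or tool-specific rules


def classify_techniques(rules: list[tuple[str, str]]) -> dict[str, str]:
    """Map each technique ID to 'behavior' or 'ioc'; behavior wins if both exist."""
    kinds: dict[str, str] = {}
    for technique_id, kind in rules:
        if kind not in ("behavior", "ioc"):
            raise ValueError(f"unknown rule kind: {kind}")
        # A behavior rule always overrides; an IOC rule only fills a gap.
        if kind == "behavior" or technique_id not in kinds:
            kinds[technique_id] = kind
    return kinds


def layer_entries(kinds: dict[str, str]) -> list[dict]:
    """Turn the classification into Navigator-style technique entries."""
    return [
        {
            "techniqueID": tid,
            "color": BEHAVIOR_COLOR if kind == "behavior" else IOC_COLOR,
            "comment": f"{kind}-based coverage",
        }
        for tid, kind in sorted(kinds.items())
    ]


# Hypothetical rule inventory: (technique ID, rule kind).
rules = [
    ("T1021", "ioc"),
    ("T1021", "behavior"),
    ("T1071", "ioc"),
    ("T1059", "behavior"),
    ("T1059", "ioc"),
]

kinds = classify_techniques(rules)
for entry in layer_entries(kinds):
    print(entry)
```

Techniques absent from the output have no rules at all, so the same data still answers the binary question of where coverage is missing.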

Custom Scoring

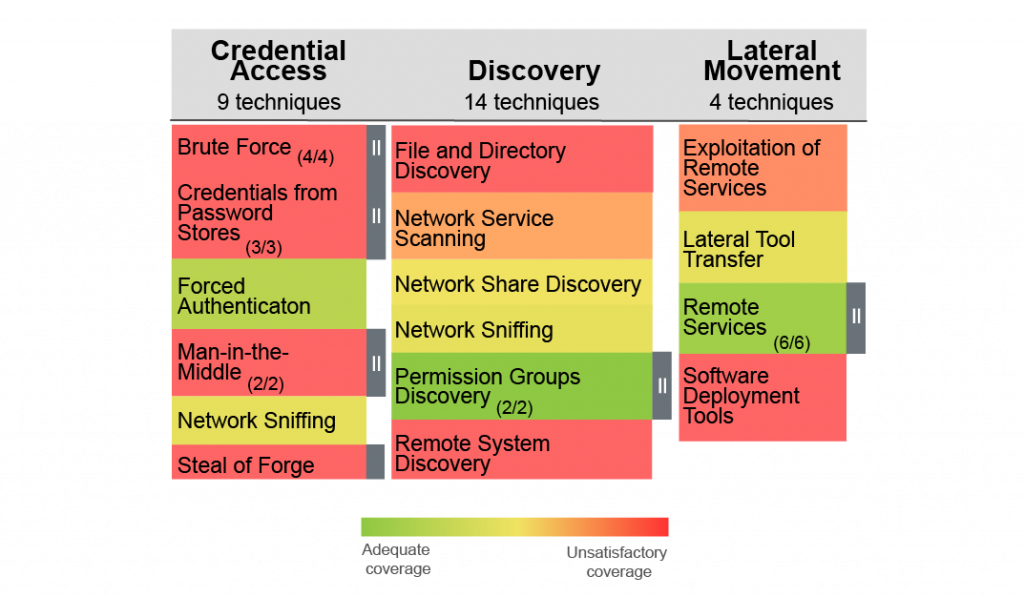

This is where we can really start to have some fun. When you maintain sufficient metadata on your detection logic, you can design algorithms to get a better idea of your realistic coverage for a given technique. Let’s say you want to score each MITRE ATT&CK technique that is usable for detection (see Red Canary’s common pitfall #2 for a more detailed explanation of determining which detections are usable). First, break out each rule by technique. Then look at other rule attributes: the confidence that the rule will detect the behavior, the confidence that a detected behavior is in fact malicious (this blog post explains the difference between these two confidence attributes), how specific the rule is (intelligence-based rules are specific, while tactic-based rules are more general), and so forth.

In addition to rule attributes, you should also look at technique attributes for a more thorough examination. For example, some techniques are narrow and need only a few detection rules for adequate coverage. Other techniques are quite broad, and you could write rules for ages without fully covering them.

If I go back to my prioritization of threats based on risk, I can see further opportunities to tune my scoring. For example, perhaps I’ve decided that lateral movement within my network is a higher priority than certain discovery techniques, perhaps based on the cost associated. In that case, I could weight the lateral movement techniques differently, so that I need more coverage points to be fully covered. Figure 3 shows an example of custom scoring to visualize detection coverage.
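To make the idea concrete, here is one possible scoring scheme, sketched under stated assumptions; it is not the algorithm ATR uses. Each rule earns points equal to the product of its two confidence values, IOC-based rules are discounted by an arbitrary factor, and each technique needs `breadth * priority_weight` points to count as fully covered. The `Rule` and `Technique` classes, the `IOC_DISCOUNT` constant, and all sample values are hypothetical.

```python
from collections import defaultdict
from dataclasses import dataclass

IOC_DISCOUNT = 0.5  # assumed penalty for narrow, IOC-based rules


@dataclass(frozen=True)
class Rule:
    technique_id: str
    detect_confidence: float     # 0-1: will the rule fire on the behavior?
    malicious_confidence: float  # 0-1: is a hit actually malicious?
    behavior_based: bool


@dataclass(frozen=True)
class Technique:
    technique_id: str
    breadth: float                # points needed; broad techniques need more
    priority_weight: float = 1.0  # above 1 raises the bar for high-risk areas


def rule_points(rule: Rule) -> float:
    """Points one rule contributes toward covering its technique."""
    base = rule.detect_confidence * rule.malicious_confidence
    return base if rule.behavior_based else base * IOC_DISCOUNT


def coverage_scores(rules: list[Rule], techniques: list[Technique]) -> dict[str, float]:
    """Score each technique from 0.0 (no coverage) to 1.0 (fully covered)."""
    earned: dict[str, float] = defaultdict(float)
    for rule in rules:
        earned[rule.technique_id] += rule_points(rule)
    scores = {}
    for tech in techniques:
        required = tech.breadth * tech.priority_weight
        if required <= 0:
            raise ValueError(f"{tech.technique_id}: required points must be positive")
        scores[tech.technique_id] = min(1.0, earned[tech.technique_id] / required)
    return scores


# Hypothetical inventory: lateral movement (T1021) is weighted above discovery (T1046).
techniques = [
    Technique("T1021", breadth=2.0, priority_weight=1.5),
    Technique("T1046", breadth=1.0),
    Technique("T1071", breadth=1.0),
]
rules = [
    Rule("T1021", 0.8, 0.75, behavior_based=True),
    Rule("T1021", 1.0, 0.9, behavior_based=False),
    Rule("T1046", 0.9, 0.5, behavior_based=True),
    Rule("T1071", 0.8, 1.0, behavior_based=True),
    Rule("T1071", 0.5, 1.0, behavior_based=True),
]

for tid, score in coverage_scores(rules, techniques).items():
    print(f"{tid}: {score:.2f}")
```

Capping the score at 1.0 keeps a pile of redundant rules on one technique from masking gaps elsewhere, and the resulting values can be fed into a Navigator layer as per-technique scores. The weights and discount are tuning knobs, so expect to adjust them until the picture matches your own judgment of where coverage is strong.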

After some initial tweaking, I find custom scoring to be the most helpful tool for planning the team’s detection content priorities. I can also use it with selected audiences outside the team — for example, when justifying the team’s priorities to management.

Conclusion

By overlaying my risk priorities on our current coverage, I determine which areas need investment and build out our detection research pipeline. My chosen visualization technique explains the detection coverage to security teams, and potentially to external audiences as well. I love the quote from Sun Tzu’s “The Art of War” where he states, “If you know the enemy and know yourself, you need not fear the result of a hundred battles.” By researching threats to understand your priorities, you can know the enemy; and by digging in on your existing detection rules, you can know yourself. Now go forth and win a hundred battles.
