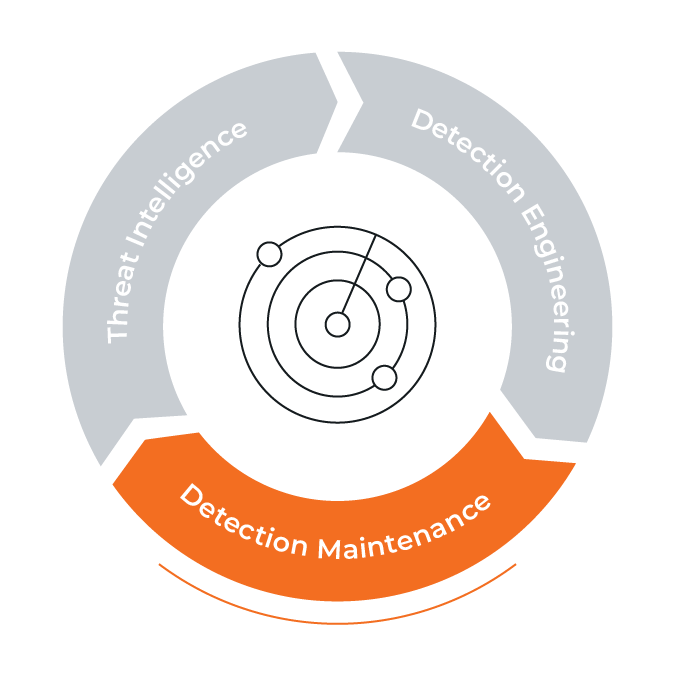

Quality Control: Keeping Your Detections Fresh

In “So, You Want to Be a Detection Engineer?”, we examined the thought process behind creating detection rules. While I like to think every detection rule I write will last forever, in reality detections need care and attention to stay valid and accurate over time.

Problem Statement

A detection’s effectiveness – the ability to identify malicious behavior while minimizing false positives – changes over time. Factors such as updates to existing software (both malicious and legitimate), the addition of new software, and changes to the network can all induce change. Quality Control (QC) is the process of tracking how rules perform over time and then revising or removing those that have become less effective.

Our original rules are based on the data available when we write them. We prioritize our limited time by detecting the malware that poses the greatest threat (admittedly a subjective assessment). Available data includes the danger posed by particular malware, threat activity seen recently on our networks, threats known to be active globally and against our specific industry, and the behavior of legitimate software on our networks. This data changes over time, and those changes alter how accurately our detection rules identify badness. Just as spending a lot of money on security hardware today does not magically protect you for the rest of eternity, the detections we write must continually adapt to the changing threat landscape.

Common Detection QC Motivations

Several issues reinforce the need to actively manage detection content rather than treating its development as a one-time event.

- Changing Environment – The networks we defend are dynamic environments that change frequently. System administrators alter which protocols are allowed through firewalls and approve the use of new applications. For example, when a detection is originally written, SSH may not be allowed inbound from foreign countries; however, opening a new office abroad may require allowing SSH connections to and from that country. Detection rules need to adapt.

- Changing Threats – The threats of today will not sit still for long. AV-test.org reports seeing approximately 350,000 new samples per day. Obviously, most of those samples are not completely new; they are mostly the result of small modifications (new C2, AV bypasses, etc.) or automated polymorphism. Current rules may continue to detect these new variants, or they may need tweaks to detect the malicious activity. Fundamental changes to malware, however, prompt a more detailed review. A more concrete example involves the well-known offensive security toolkit Cobalt Strike. Version 4.0 was recently released, calling into question any existing detections written to identify activity from previous versions. Detection engineers need a system to definitively evaluate how well current rules continue to catch threats.

- Changing Detection Technology – Iterative improvements are constantly being made to both open source and privately held technology, allowing for more sophisticated identification of malicious activity. Maybe it’s something simple, like a newly available metadata field in a data feed or a new logging source. It could be more advanced, like a new analytics module for your network sensor that makes inferences about encrypted traffic. Integrating these technology improvements into your QC workflow can produce a more effective detection than the original rule.

Why This Matters

What do users want out of their security software? They want to be able to trust that a) the detection rules catch as much malicious behavior as possible (coverage), and b) the indicated events or alerts accurately reflect reality (confidence). When a product spits out a high volume of false positive events, or fails to catch activity it previously identified successfully, confidence wanes.

The Solution

To address the quality concerns that come with a changing landscape, we must actively identify detection content in need of maintenance. We keep detection quality high with a series of QC checks that flag when rules begin to lose their effectiveness. Automated systems monitor rules daily and surface those in need of manual review. Algorithms assess key rule attributes (confidence, severity, category, etc.) and notify us of:

- Noisy rules: Rules that trigger a drastic increase in the number of alerts compared to previous detection rates

- Dormant rules: Rules that have not triggered on malicious activity in a while

- Unacceptable level of false positives: Rules whose alerts analysts too often classify as false positives after confirming that legitimate activity caused them
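The three checks above lend themselves to simple automation. The following is only a minimal Python sketch of how such checks might be wired together, under stated assumptions: the `RuleStats` record, its field names, the verdict labels, and every threshold (a baseline-plus-standard-deviations spike test for noisy rules, a 14-day quiet window for dormant rules, a 50% false-positive ratio) are hypothetical choices for illustration, not a description of any particular production system.

```python
from __future__ import annotations

from dataclasses import dataclass
from statistics import mean, pstdev


@dataclass
class RuleStats:
    """Hypothetical per-rule history; field names are illustrative assumptions."""
    name: str
    daily_alerts: list[int]      # alert counts per day, oldest first; last entry is "today"
    analyst_verdicts: list[str]  # assumed labels: "true_positive" / "false_positive"


def is_noisy(stats: RuleStats, z_threshold: float = 3.0, min_alerts: int = 10) -> bool:
    """Flag a drastic increase: today's count far above the historical baseline."""
    history, today = stats.daily_alerts[:-1], stats.daily_alerts[-1]
    if not history or today < min_alerts:
        return False
    baseline, spread = mean(history), pstdev(history)
    # max(spread, 1.0) keeps a perfectly flat history from making any uptick "drastic".
    return today > baseline + z_threshold * max(spread, 1.0)


def is_dormant(stats: RuleStats, quiet_days: int = 14) -> bool:
    """Flag a rule that used to fire but has been silent for the last `quiet_days` days."""
    if len(stats.daily_alerts) <= quiet_days:
        return False
    earlier = stats.daily_alerts[:-quiet_days]
    recent = stats.daily_alerts[-quiet_days:]
    return sum(earlier) > 0 and sum(recent) == 0


def has_excess_false_positives(stats: RuleStats, max_fp_ratio: float = 0.5,
                               min_verdicts: int = 20) -> bool:
    """Flag a rule whose analyst-reviewed alerts are mostly false positives."""
    n = len(stats.analyst_verdicts)
    if n < min_verdicts:  # too few reviews to judge
        return False
    return stats.analyst_verdicts.count("false_positive") / n > max_fp_ratio


CHECKS = (("noisy", is_noisy),
          ("dormant", is_dormant),
          ("false_positives", has_excess_false_positives))


def qc_report(rules: list[RuleStats]) -> dict[str, list[str]]:
    """Return {rule name: [reasons]} for every rule that needs manual review."""
    report = {}
    for rule in rules:
        reasons = [label for label, check in CHECKS if check(rule)]
        if reasons:
            report[rule.name] = reasons
    return report


# Hypothetical rule histories for illustration only.
rules = [
    RuleStats("powershell_download", [5, 6, 4, 5, 7, 5, 6, 300], ["true_positive"] * 5),
    RuleStats("old_c2_beacon", [3, 4, 2, 5] + [0] * 14, ["true_positive"] * 5),
    RuleStats("ssh_foreign_inbound", [2] * 20, ["false_positive"] * 18 + ["true_positive"] * 4),
]
print(qc_report(rules))
# {'powershell_download': ['noisy'], 'old_c2_beacon': ['dormant'], 'ssh_foreign_inbound': ['false_positives']}
```

Each check only decides whether a rule deserves a human look; the root-cause analysis described next still happens manually.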

Detection engineers respond daily to these automated notifications. They manually analyze the data and determine the root cause. Did Emotet shift operations to a new dropper? Is an overly broad detection alerting on a newly approved, legitimate application? Perhaps a security assessment just kicked off against the protected network. After understanding what changed, detection engineers edit existing rules and/or create new detections as appropriate to ensure coverage and accuracy.

How This Really Looks

To show the value, let’s look at a few examples where problems were identified and subsequently rectified as a result of our QC system.

Case Study #1: Dormant Rule

One situation we look for in our detection content is a dormant rule: a detection rule that has gone quiet after previously alerting on some activity. This happens for several reasons, but usually the activity the rule looks for is simply not occurring as it used to. The malware may no longer operate on the network we’re monitoring; the threat actor may have stopped operations of their own volition, or a security patch or a reconfiguration of the environment may now block the malware. However, the previously identified activity or behavior may also have changed slightly to avoid detection.

We are more than satisfied when a customer makes a network change that reduces the number of alerts associated with a specific activity. When the malware (rather than the customer) makes the change, we are less than satisfied. This was recently the case when we noticed a rule had gone dormant. The rule had historically been relatively active, and our QC system successfully alerted us to the issue. Because activity for this rule had been common across many customers, we judged it unlikely that all of the affected customers had simultaneously made changes to block this piece of malicious software. By digging in, we discovered a new variant of malicious software using a similar pattern, and we added detection rules to identify this new activity as well. Our assumption is that the original authors pivoted to this new tool. We will maintain our coverage for the old tool as well, in case they or other threat actors ever resume operations with that malware.
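The reasoning in this case study, that one customer going quiet suggests a local change while many customers going quiet at once suggests the threat itself changed, can be expressed as a small triage helper. This is a minimal sketch under assumptions: the input shape (per-customer alert counts for one rule over two equal-length windows), the hypothetical customer IDs, and the 80% "fleet-wide" cutoff are all illustrative, not a documented procedure.

```python
from __future__ import annotations


def classify_dormancy(per_customer: dict[str, tuple[int, int]],
                      fleet_fraction: float = 0.8) -> str:
    """Triage a quiet rule across customers.

    per_customer maps a hypothetical customer ID to (alerts_before, alerts_after)
    for a single rule, counted over two equal-length time windows.
    """
    # Only customers where the rule used to fire can tell us anything about dormancy.
    previously_active = {c: after for c, (before, after) in per_customer.items() if before > 0}
    if not previously_active:
        return "never active"

    went_quiet = [c for c, after in previously_active.items() if after == 0]
    fraction_quiet = len(went_quiet) / len(previously_active)

    if fraction_quiet >= fleet_fraction:
        # Simultaneous silence across most customers: suspect the malware or tooling changed.
        return "fleet-wide: investigate possible malware change"
    if went_quiet:
        # Isolated silence: more likely a customer network or configuration change.
        return "local: check customer environment changes"
    return "active"


print(classify_dormancy({"cust_a": (40, 0), "cust_b": (12, 0), "cust_c": (25, 0)}))
# fleet-wide: investigate possible malware change
print(classify_dormancy({"cust_a": (40, 0), "cust_b": (12, 9), "cust_c": (25, 30)}))
# local: check customer environment changes
```

A "fleet-wide" result does not prove the malware evolved; it only raises the priority of the manual investigation that found the new variant here.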

As a result of our QC system’s continuous monitoring of our detection rules, we discovered new malicious software and quickly adapted our rules to detect it. Without continuous monitoring, the new variant would have gone undetected.

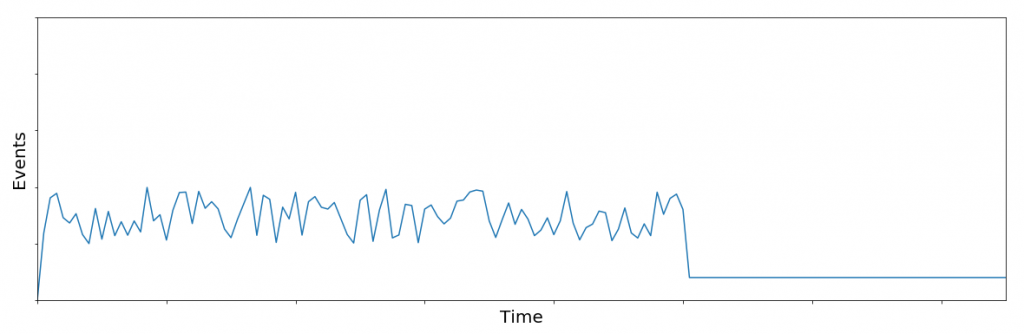

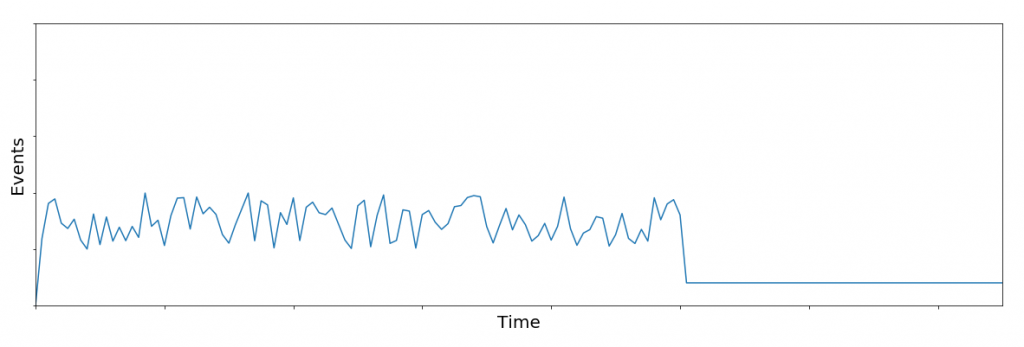

Case Study #2: Noisy Rule

Soon after starting this job, I woke up (note: I didn’t literally wake up to the sound of customer alerts) to hundreds of alerts originating from a single detection rule. The rule was intended to detect PowerShell being used to install malicious software on endpoints, so a lengthy whitelist was in place to prevent legitimate executables from trusted sources from tripping it. Any time there is a barrage of alerts across a customer network, the immediate suspicion is either a) a worm is moving laterally within the customer’s network, or b) the rule needs some attention. Thankfully, in this case we had simply missed a legitimate source of trusted binaries: the customer’s system administrator had introduced automation that directed multiple endpoints to download legitimate software from this trusted source. Thanks to our QC system, we quickly identified the cause of the spike and resolved the situation with more robust detection logic.
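To make the whitelist idea concrete, here is a minimal sketch of a PowerShell download rule that suppresses alerts for trusted sources. Everything in it is an assumption for illustration: the event fields (`process`, `download_url`), the example host names in `TRUSTED_HOSTS`, and the exact-or-subdomain matching strategy are hypothetical and not the actual rule from this case study.

```python
from urllib.parse import urlparse

# Hypothetical whitelist of trusted software distribution hosts.
TRUSTED_HOSTS = {"updates.example-vendor.com", "downloads.example-corp.internal"}


def is_trusted(url: str, trusted_hosts=TRUSTED_HOSTS) -> bool:
    """True if the URL's host is a trusted host or a subdomain of one."""
    host = (urlparse(url).hostname or "").lower()
    # Exact or subdomain matching avoids naive substring checks, which a host
    # like "updates.example-vendor.com.evil.net" would slip past.
    return any(host == h or host.endswith("." + h) for h in trusted_hosts)


def should_alert(event: dict, trusted_hosts=TRUSTED_HOSTS) -> bool:
    """Alert when PowerShell downloads from a source not on the whitelist.

    `event` is a hypothetical endpoint record with 'process' and 'download_url' keys.
    """
    if (event.get("process") or "").lower() != "powershell.exe":
        return False
    url = event.get("download_url")
    if not url:
        return False
    return not is_trusted(url, trusted_hosts)


# A legitimate source the whitelist has not yet captured fires the rule...
new_source = {"process": "powershell.exe",
              "download_url": "https://repo.newvendor.example/tool.msi"}
print(should_alert(new_source))  # True
# ...until the source is reviewed and added.
print(should_alert(new_source, TRUSTED_HOSTS | {"repo.newvendor.example"}))  # False
```

Matching on parsed host names rather than raw URL substrings is one way to keep such a whitelist from becoming an easy bypass as it grows.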

Conclusion

As you can see, maintaining high-quality detection content takes considerable effort. Automated QC processes, combined with adequate detection engineer capacity to research root causes and update your ruleset, will maintain the effectiveness of your detections when, not if, malware, legitimate software, and the network you monitor change. A proper QC workflow will sustain all the hard work you put into writing the original detection rules and ensure analysts continue to trust your detection content.