Viewing Cybersecurity Incidents as ‘Normal Accidents’

Editor’s note: Too often your weakest security link is a human, whom we like to call Dave. He doesn’t mean to be a threat; he’s just a person going about his day. For National Cybersecurity Awareness Month, get tips for promoting good security practices among employees like him.

This post originally appeared in Help Net Security.

As we continue through National Cybersecurity Awareness Month (NCSAM), a time to focus on how cybersecurity is a shared responsibility that affects all Americans, one of the themes I’ve been pondering is personal accountability.

Years ago, I read Charles Perrow’s book, Normal Accidents: Living with High-Risk Technologies, which analyzes the social side of technological risk. In the book, first published in 1984, Perrow analyzed complex systems such as nuclear power, aviation and space technology, at a time when these technologies were still quaint by today’s standards. Even then, Perrow argued that an engineering-driven approach to system safety was doomed to fail because of the very complexity of the systems he was examining. As he put it, “normal accidents” were bound to happen.

Perrow challenged the traditional view that accidents (defined as “an unintended and untoward event”) have single causes, such as human error or lack of attention. He argued instead that these accidents were normal and inevitable because:

- The systems were complex

- The systems were tightly coupled

- The systems had catastrophic potential

In other words, big accidents have small beginnings. The same can be said in cybersecurity.

Cybersecurity Learnings from Airline Accidents

One of the best illustrations of Perrow’s theory, and one with clear lessons for cybersecurity, is the crash of Air France Flight 4590, a Concorde SST, in 2000. Although the entire accident unfolded in approximately two minutes, the consequences were dire: 113 people lost their lives.

At the time, the Concorde was one of the safest airliners ever built, operated by extremely skilled pilots and flight engineers. Yet a myriad of small failures interacted to cause the crash. If one of the safest airline systems can suffer a “normal accident,” then any system can, because of interactive complexity and tight coupling.

From Flight Accidents to Cybersecurity Incidents

Within an organization, interactive complexity exists between groups of people, but these teams are often loosely coupled, as changes tend to work their way through the business over time. In modern information technology environments, however, organizations face both interactive complexity and tight coupling, so small failures can quickly compound and bring down other system functions. That is the formula for normal accidents. Simply put, an organization is guaranteed to face information technology accidents: it’s not a question of if, but when.

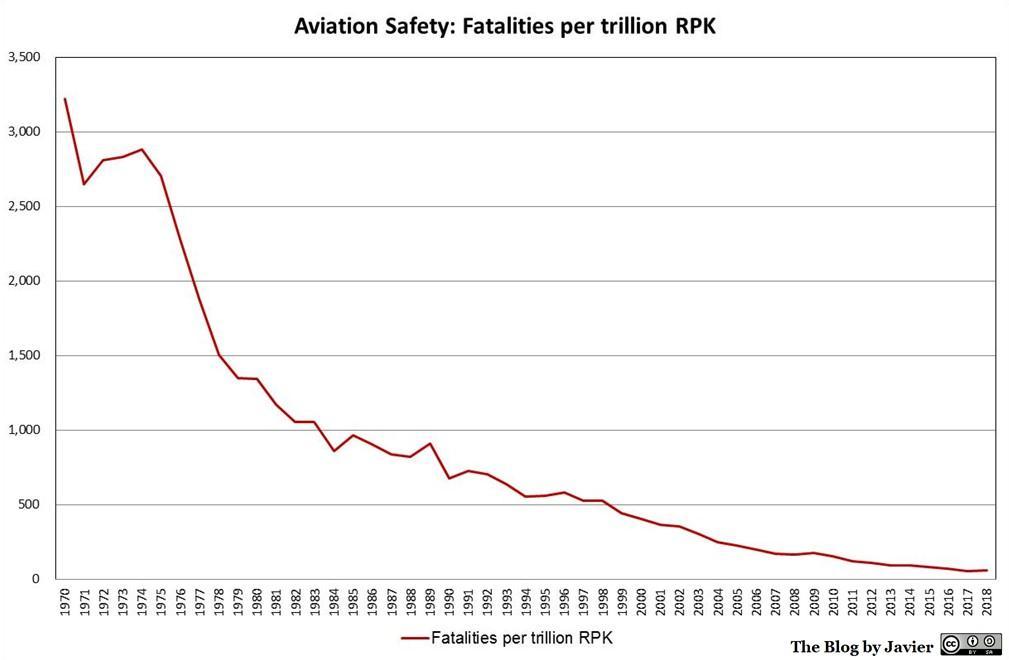

Despite the inevitability of a cybersecurity accident, this doesn’t mean it’s time to throw in the towel. Once again, we can learn from the aviation industry — here is a graph of fatalities per trillion revenue passenger kilometers from 1970 to 2018:1

Over time, fatalities from normal accidents in aviation have dropped dramatically, thanks to several factors, including:

- The creation of regulatory bodies and enforcement (FAA, ICAO)

- The adoption of rigorous accident investigation (NTSB, BEA)

- The open and public sharing of accident retrospectives

- Improved survivability during an accident (evacuation procedures, airport design, better materials)

- Improved training, certifications and crew resource management

Why Personal Accountability Isn’t Enough

This NCSAM, as we zero in on the goal of “personal accountability,” it has become clear to me that this approach alone won’t be effective. In information technology, systems are too complex and tightly coupled for us to assume that personal accountability can prevent the next normal accident. As in the aviation sector, here is what we need to focus on:

- We need strong, consistent and enforced regulatory frameworks

- We need frameworks that require performing post-incident analyses

- We need those analyses to be openly and publicly shared, for all to see

- We need to emphasize resilience during an incident, not prevention

- We need to build effective training that puts the toolkit of the responder in everyone’s hands

We need to build accountability and proactive steps into the system, not the people. The crew on Air France Flight 4590 was highly accountable, well trained and took proactive measures, but a lack of personal accountability wasn’t the problem that needed solving.

References

1. Mediavilla, Javier Irastorza. 2019. “Aviation safety evolution (2018 update).” The Blog by Javier. https://theblogbyjavier.com/2019/01/02/aviation-safety-evolution-2018-update/.
