To NFV, or Not to NFV? That Is the Question!

Editor’s note: Also see Part 2 of this series.

Many networking and IT professionals, all the way up to the C-level, believe that virtualizing the network, along with the applications and services that run on it, is the best thing since sliced bread, or at least since the invention of the transistor. The belief is that virtualization will solve all of the world’s communications problems.

While virtualization certainly has its merits and allows corporations to be more agile, flexible and less vendor dependent, there are still limitations to where and how virtualization should be applied so as not to negatively impact cost, space and energy. This is a real concern for network function virtualization — that is, NFV — being implemented in service provider networks now and in the future with a big drive towards 5G. (Note: The terms virtual, virtualized and virtualization also include containers or containerization.)

The idea of virtualization and its many benefits is based on using software to define all the functionality, whether for network routing/switching, services, storage or applications, while remaining agnostic to the hardware platform it runs on. Trends like Moore’s Law were supposed to ensure that processing speeds and buses would keep up with the expected growth in network data and traffic. Although general-purpose processing speeds have continued to increase, two important trends have occurred at the same time:

- Network data and traffic have increased at a faster rate due to changes in user behavior, applications and access technology

- Software overheads have increased due to developments in virtual platforms, creating additional abstraction to allow for greater agility and flexibility

As some early adopters of “going all virtual” have found, there are a number of performance limitations that can easily derail a digital transformation strategy. These are largely due to the limitations of general-purpose processors and processor busses when it comes to handling network traffic and data in motion.

Fortunately, there have also been developments in ASICs for traffic switching, which can still support the goals of lower cost, less vendor dependence and a more software-driven network, by utilizing open compute/networking and software-defined networking (SDN). This gives data center and network engineers more choices and lets them be more selective in what they virtualize.

Being more selective may even mean retaining some existing monolithic or dedicated hardware appliances, which are probably still more than adequate for what they need to do, thereby saving the cost of replacing them.

NFV, Taking a Measured Approach

So, rather than simply going all out and virtualizing absolutely everything, a more measured approach — looking at each application or network function and its suitability to being virtualized — is more sensible and pragmatic.

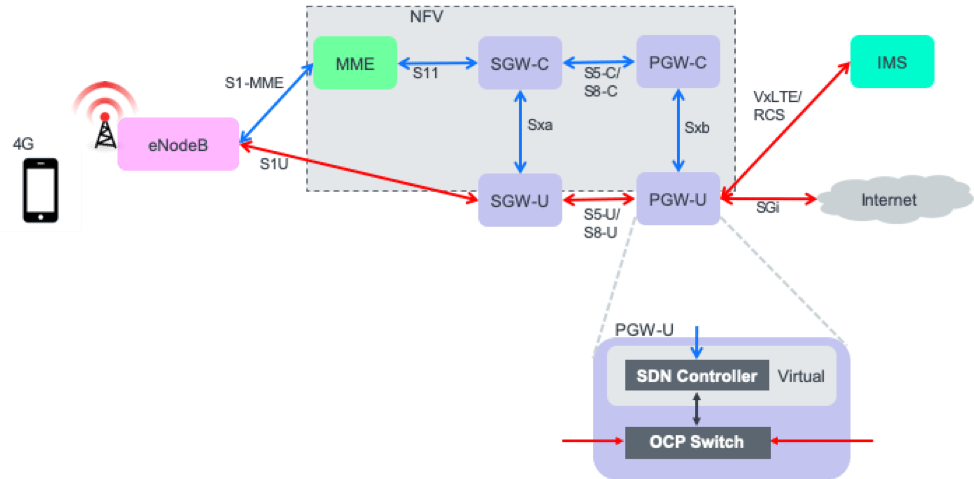

Consideration should be given to the traffic volume and latency sensitivity of each application or network function. This will most likely result in a hybrid network that could be a mix of fully virtual, software-defined and dedicated hardware, as shown in Figure 1.

- Control and signaling plane functions would typically be the first and most logical applications to virtualize, as long as there is a driver to do so.

- The data or user plane functions typically carry a much higher traffic volume and are often more latency sensitive:

  - Smaller or lower volume sites may be candidates for virtualization.

  - Larger or higher volume sites may more prudently reuse existing dedicated hardware boxes or adopt more of an SDN solution. In an SDN implementation, the controller portion is essentially software and so could be virtualized, while the portion of the data or user plane function that switches or routes the actual traffic can be a commodity commercial off-the-shelf (COTS) switch box.
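
The selection logic described in these bullets can be written down as a small decision function. The following Python sketch is purely illustrative: the class names, the `high_volume_site` flag and the `has_driver` flag are hypothetical, and what counts as a "high volume" site is left to each operator rather than taken from any measured threshold.

```python
from dataclasses import dataclass
from enum import Enum


class Plane(Enum):
    CONTROL = "control and signaling plane"
    USER = "data or user plane"


class Deployment(Enum):
    VIRTUALIZE = "candidate for virtualization"
    SDN_OR_DEDICATED = "SDN with COTS switching, or existing dedicated hardware"
    NO_CHANGE = "leave as is"


@dataclass
class NetworkFunction:
    name: str
    plane: Plane
    high_volume_site: bool   # hypothetical flag; the operator decides what "high" means
    has_driver: bool = True  # is there a reason to virtualize at all?


def recommend(fn: NetworkFunction) -> Deployment:
    """Map a network function to a deployment option, following the bullets above."""
    if fn.plane is Plane.CONTROL:
        # Control and signaling functions are the first candidates,
        # but only when there is a driver to virtualize them.
        return Deployment.VIRTUALIZE if fn.has_driver else Deployment.NO_CHANGE
    if fn.high_volume_site:
        # High-volume user plane traffic stays on dedicated hardware or an SDN switch.
        return Deployment.SDN_OR_DEDICATED
    # Smaller or lower volume user plane sites may be virtualized.
    return Deployment.VIRTUALIZE


if __name__ == "__main__":
    functions = [
        NetworkFunction("signaling", Plane.CONTROL, high_volume_site=False),
        NetworkFunction("user plane, small site", Plane.USER, high_volume_site=False),
        NetworkFunction("user plane, large site", Plane.USER, high_volume_site=True),
    ]
    for fn in functions:
        print(f"{fn.name}: {recommend(fn).value}")
```

A real assessment would also weigh latency sensitivity, space, capital cost and operational cost for each function; the sketch captures only the control/user plane and site-volume split.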

This puts a lot more power and flexibility in the hands of data center and network operations teams, who can choose the type of solution for each function in their network that meets their needs in terms of functionality, flexibility, scalability, space, capital cost and operational cost (which includes energy usage and heat dissipation). Thus, a bigger question than “To NFV, or not to NFV?” is to ask ourselves, “What to NFV, and what not to NFV?”

In part two of this series — “What to Tap Virtually, and What Not to Tap Virtually” — we will dive into specific functions where virtualization makes sense, and where it doesn’t.
