Is NetFlow Really the Silver Bullet for Service Providers, or Is There a Better Way?

NetFlow definitely has its place in your network monitoring toolbox, but we believe there is an even better way to leverage it. NetFlow helps service providers reduce OpEx across key security and monitoring platforms, but the real value comes from going beyond NetFlow to produce rich IPFIX metadata records. With this approach, the service provider not only stays compliant but also achieves significant operational efficiencies.

Before we go on, it’s important to understand the difference between standard NetFlow and the creation of high-value, unsampled IPFIX metadata records.

What Is NetFlow?

NetFlow is a network protocol developed by Cisco for collecting flow data on IP traffic as it enters or exits an interface. Analyzing this data produces a picture of where traffic is going and in what volume.

Service providers use NetFlow to analyze network traffic to determine its point of origin, destination, volume and paths on the network. Before NetFlow, network engineers and administrators used Simple Network Management Protocol (SNMP) to monitor network traffic volumes on interfaces.

While SNMP was effective for monitoring traffic volume and planning capacity, it provided no detailed insight into the traffic itself, such as its type, source or destination. NetFlow filled that gap. The Internet Engineering Task Force (IETF) later standardized a protocol based on Cisco's NetFlow v9, called Internet Protocol Flow Information eXport (IPFIX), which is now widely implemented by leading network equipment vendors. IPFIX is often referred to as NetFlow v10, because its message header carries version number 10.
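To make the contrast with SNMP concrete, here is a minimal Python sketch of what interface-level volume monitoring yields: two polls of an interface octet counter become a single average bit rate. It assumes a 64-bit counter such as IF-MIB's ifHCInOctets and that the two poll values were already retrieved by some SNMP tool; the function name is illustrative only.

```python
# Illustrative only: turns two polls of a 64-bit SNMP interface octet counter
# (such as IF-MIB ifHCInOctets) into an average bit rate. The poll values are
# supplied by the caller; no SNMP library is used here.

COUNTER64_MODULUS = 2 ** 64


def interface_bps(prev_octets: int, curr_octets: int, interval_s: float) -> float:
    """Average bits per second between two counter polls, allowing for one wrap."""
    if interval_s <= 0:
        raise ValueError("interval_s must be positive")
    # The modulo handles a single counter wrap between polls.
    delta = (curr_octets - prev_octets) % COUNTER64_MODULUS
    return delta * 8 / interval_s


# Two polls 300 seconds apart: 3,750,000,000 octets arrived in between.
rate = interface_bps(10_000_000_000, 13_750_000_000, 300)
print(f"{rate / 1e6:.1f} Mbit/s")  # 100.0 Mbit/s
```

The result is one aggregate number per interface: it says nothing about which hosts, ports or applications produced the traffic, which is exactly the detail flow records add.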

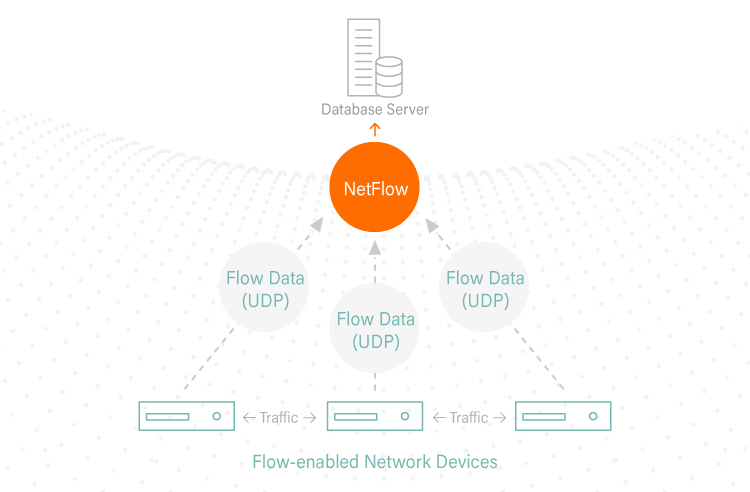

How Does NetFlow Work?

NetFlow follows a simple process of data collection, sorting and analysis. Its main components are:

IP Flow

An IP flow is a group of packets that share the same values for a set of attributes. As a router or switch forwards a packet, it examines these attributes: IP source address, IP destination address, source port, destination port, Layer 4 protocol type, class of service, and router or switch interface. Packets with identical values for all of them belong to the same flow.
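The flow definition above can be sketched in Python as a key built from those seven attributes, with packets grouped by key. The packet dictionaries and their field names are hypothetical stand-ins for parsed headers; a real router does this in its forwarding path, not in Python.

```python
from collections import defaultdict
from typing import NamedTuple


class FlowKey(NamedTuple):
    """The seven attributes listed above; packets with equal keys form one flow."""
    src_ip: str
    dst_ip: str
    src_port: int
    dst_port: int
    protocol: int   # IP protocol number, e.g. 6 = TCP, 17 = UDP
    tos: int        # class-of-service (ToS/DSCP) byte
    input_if: int   # ingress interface index


def group_into_flows(packets: list[dict]) -> dict[FlowKey, list[dict]]:
    """Group packet dicts (hypothetical field names) by their flow key."""
    flows: dict[FlowKey, list[dict]] = defaultdict(list)
    for pkt in packets:
        key = FlowKey(pkt["src_ip"], pkt["dst_ip"], pkt["src_port"],
                      pkt["dst_port"], pkt["protocol"], pkt["tos"], pkt["input_if"])
        flows[key].append(pkt)
    return dict(flows)


packets = [
    {"src_ip": "10.0.0.1", "dst_ip": "192.0.2.10", "src_port": 51000, "dst_port": 443,
     "protocol": 6, "tos": 0, "input_if": 1, "bytes": 1500},
    {"src_ip": "10.0.0.1", "dst_ip": "192.0.2.10", "src_port": 51000, "dst_port": 443,
     "protocol": 6, "tos": 0, "input_if": 1, "bytes": 400},
    {"src_ip": "10.0.0.2", "dst_ip": "192.0.2.10", "src_port": 53000, "dst_port": 53,
     "protocol": 17, "tos": 0, "input_if": 1, "bytes": 80},
]
flows = group_into_flows(packets)
print(len(flows))  # 2 flows: one TCP/443, one UDP/53
```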

NetFlow Cache

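The NetFlow cache is a table of condensed information. Once packets have been examined, each flow gets a single cache entry whose packet and byte counters and timestamps are updated as further packets arrive. Entries are removed from the cache and exported when the flow ends or times out.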
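A flow cache of this kind can be sketched as a dictionary of per-flow counters with two expiry rules: an inactive timeout for idle flows and an active timeout that forces long-lived flows to be exported periodically. This is a simplified Python model, not any vendor's implementation; the timeout values and class names are hypothetical.

```python
from dataclasses import dataclass

# Hypothetical timeout values; real devices make these configurable.
ACTIVE_TIMEOUT_S = 60.0    # export long-lived flows at least this often
INACTIVE_TIMEOUT_S = 15.0  # export flows idle for this long


@dataclass
class FlowEntry:
    first_seen: float
    last_seen: float
    packets: int = 0
    octets: int = 0


class FlowCache:
    def __init__(self) -> None:
        self.entries: dict[tuple, FlowEntry] = {}

    def update(self, key: tuple, length: int, now: float) -> None:
        """Fold one packet into its flow's counters, creating the entry if needed."""
        entry = self.entries.get(key)
        if entry is None:
            entry = self.entries[key] = FlowEntry(first_seen=now, last_seen=now)
        entry.packets += 1
        entry.octets += length
        entry.last_seen = now

    def expire(self, now: float) -> list[tuple[tuple, FlowEntry]]:
        """Remove and return entries that hit the inactive or active timeout."""
        expired = [
            (key, e) for key, e in self.entries.items()
            if now - e.last_seen >= INACTIVE_TIMEOUT_S
            or now - e.first_seen >= ACTIVE_TIMEOUT_S
        ]
        for key, _ in expired:
            del self.entries[key]
        return expired  # these records would be handed to the exporter


cache = FlowCache()
web = ("10.0.0.1", "192.0.2.10", 51000, 443, 6)
dns = ("10.0.0.2", "192.0.2.53", 53000, 53, 17)
cache.update(web, 1500, now=0.0)
cache.update(dns, 80, now=1.0)
cache.update(web, 400, now=10.0)
done = cache.expire(now=20.0)   # dns idle 19 s: exported; web idle 10 s: kept
print([k[3] for k, _ in done])  # [53]
```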

NetFlow Collector

The next step is to export the flow records to a NetFlow collector, a reporting server that collects and processes the traffic information so that it is easy to analyze.
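On the collector side, records arrive as UDP datagrams in an export format. As one example, the sketch below decodes NetFlow v5, whose fixed layout is a 24-byte header followed by 48-byte flow records. It assumes the datagram bytes have already been received; there is no socket listener, and the test datagram is synthetic.

```python
import socket
import struct

# NetFlow v5 layout: 24-byte header followed by `count` 48-byte flow records.
V5_HEADER = struct.Struct("!HHIIIIBBH")
V5_RECORD = struct.Struct("!4s4s4sHHIIIIHHBBBBHHBBH")


def parse_v5(datagram: bytes) -> list[dict]:
    """Decode the flow records in one NetFlow v5 export datagram."""
    version, count, *_ = V5_HEADER.unpack_from(datagram, 0)
    if version != 5:
        raise ValueError(f"not NetFlow v5 (version={version})")
    flows = []
    for i in range(count):
        (src, dst, _nexthop, _in_if, _out_if, pkts, octets, _first, _last,
         sport, dport, _pad1, _flags, proto, _tos, *_rest) = V5_RECORD.unpack_from(
            datagram, V5_HEADER.size + i * V5_RECORD.size)
        flows.append({"src": socket.inet_ntoa(src), "dst": socket.inet_ntoa(dst),
                      "sport": sport, "dport": dport, "proto": proto,
                      "packets": pkts, "octets": octets})
    return flows


# Build a synthetic one-record datagram and decode it.
header = V5_HEADER.pack(5, 1, 0, 0, 0, 0, 0, 0, 0)
record = V5_RECORD.pack(socket.inet_aton("10.0.0.1"), socket.inet_aton("192.0.2.10"),
                        bytes(4), 1, 2, 3, 4500, 0, 0, 51000, 443,
                        0, 0x18, 6, 0, 0, 0, 24, 24, 0)
decoded = parse_v5(header + record)
print(decoded)
```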

How Does IPFIX Work?

IPFIX follows essentially the same process as NetFlow, but it allows much richer, customizable metadata to be extracted from network flows, because exporters send templates that define which fields each record carries.
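The template mechanism is what makes IPFIX customizable. The sketch below encodes an IPFIX message (RFC 7011) containing one Template Set that lists standard IANA Information Elements; an exporter wanting richer metadata would simply list more elements. Only the encoding is shown; the data records that follow a template and the transport are left out, and the helper names are illustrative.

```python
import struct

# A few IANA-assigned IPFIX Information Element IDs.
OCTET_DELTA_COUNT = 1
PACKET_DELTA_COUNT = 2
PROTOCOL_IDENTIFIER = 4
SOURCE_TRANSPORT_PORT = 7
SOURCE_IPV4_ADDRESS = 8
DESTINATION_TRANSPORT_PORT = 11
DESTINATION_IPV4_ADDRESS = 12

TEMPLATE_SET_ID = 2  # Set ID 2 marks a Template Set


def template_set(template_id: int, fields: list[tuple[int, int]]) -> bytes:
    """Encode one Template Set from (element_id, field_length) pairs (IANA elements only)."""
    body = struct.pack("!HH", template_id, len(fields))  # template ID (>= 256), field count
    for element_id, length in fields:
        body += struct.pack("!HH", element_id, length)
    return struct.pack("!HH", TEMPLATE_SET_ID, 4 + len(body)) + body


def ipfix_message(sets: bytes, export_time: int, sequence: int, domain_id: int) -> bytes:
    """Prefix sets with the 16-byte IPFIX message header (version number 10)."""
    return struct.pack("!HHIII", 10, 16 + len(sets), export_time, sequence, domain_id) + sets


fields = [(SOURCE_IPV4_ADDRESS, 4), (DESTINATION_IPV4_ADDRESS, 4),
          (SOURCE_TRANSPORT_PORT, 2), (DESTINATION_TRANSPORT_PORT, 2),
          (PROTOCOL_IDENTIFIER, 1), (OCTET_DELTA_COUNT, 8), (PACKET_DELTA_COUNT, 8)]
msg = ipfix_message(template_set(256, fields), export_time=0, sequence=0, domain_id=1)
print(len(msg))  # 52
```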

Why Use NetFlow or IPFIX?

NetFlow or IPFIX statistics are useful for several applications:

- Network Monitoring: Service providers can use flow-based analysis to visualize traffic patterns across the entire network. With this network-wide view, network operations (NetOps) and security operations (SecOps) teams can see when and how often users access an application. Teams can also use the data to profile a user's consumption of network and application resources and detect potential security or policy violations.

- Network Planning: Network operations teams can use flow data to track and anticipate network growth. For example, they can plan upgrades that add the ports, routing devices or higher-bandwidth interfaces needed to meet growing demand.

- Security Analysis: With flow and application data, security teams can detect changes in network behavior to identify anomalies indicative of a security breach. The data is also a valuable forensic tool to understand and replay the history of security incidents so security teams can learn from them.

- Regulatory Requirements: Flow and application data records can be generated and forwarded to the appropriate tools to check for compliance with regulations.
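As a small illustration of the security-analysis use, one naive way to spot behavior changes is to compare each host's current traffic volume, summed from flow records, against its own history. The sketch below flags hosts that exceed mean plus k standard deviations, or that have no history at all. The threshold, interval and data are hypothetical, and production tools use far more sophisticated models.

```python
from statistics import mean, pstdev


def flag_anomalies(baseline: dict[str, list[int]], current: dict[str, int],
                   k: float = 3.0) -> list[str]:
    """Return hosts whose current byte count exceeds mean + k * stdev of their history."""
    flagged = []
    for host, octets in current.items():
        history = baseline.get(host)
        if not history:
            flagged.append(host)  # never seen before: worth a look
            continue
        limit = mean(history) + k * pstdev(history)
        if octets > limit:
            flagged.append(host)
    return flagged


# Hypothetical per-host octet totals per 5-minute interval, summed from flow records.
baseline = {
    "10.0.0.1": [1_000_000, 1_200_000, 900_000, 1_100_000],
    "10.0.0.2": [50_000, 60_000, 55_000, 45_000],
}
current = {"10.0.0.1": 1_150_000, "10.0.0.2": 4_000_000, "10.0.0.9": 10_000}
print(flag_anomalies(baseline, current))  # ['10.0.0.2', '10.0.0.9']
```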

Challenges of Generating Flow Data

NetFlow generation affects the performance of the devices on which it is enabled. To limit that impact, networking devices often rely on packet sampling to generate NetFlow statistics. Low sampling rates, sometimes as low as 1 in 1,000 packets, dramatically reduce traffic visibility and can prevent teams from uncovering critical security threats or performance issues.
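A quick calculation shows why sparse sampling hides short flows. Assuming each packet is selected independently with probability 1/N (deterministic every-Nth-packet sampling behaves similarly on average), a flow of n packets is missed entirely with probability (1 − 1/N)^n. At 1 in 1,000, a 10-packet flow goes completely unseen about 99% of the time.

```python
def miss_probability(flow_packets: int, sampling_n: int) -> float:
    """Chance that random 1-in-N packet sampling selects none of a flow's packets."""
    return (1 - 1 / sampling_n) ** flow_packets


# How visible are flows of various sizes at 1-in-1,000 sampling?
for packets in (1, 10, 100, 1_000, 10_000):
    p = miss_probability(packets, 1_000)
    print(f"{packets:>6}-packet flow: {p:.1%} chance of being invisible")
```

Short-lived flows such as scans, beacons or single DNS lookups are exactly the ones most likely to disappear.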

Generating IPFIX records that carry more than the standard Layer 2 to 4 NetFlow fields would place an even greater load on network devices, and compensating by sampling even more sparsely would leave too few packets for the resulting records to be meaningful.

Unfiltered, Unsampled Metadata Is the Solution

Reduced or No Sampling

As mentioned above, NetFlow generation is typically performed by the routers and switches in the production network, where it consumes device resources. Keeping up with growing data volumes and network speeds is a major concern for enterprises and service providers, many of which are already straining to provide enough compute resources to match demand.

Gigamon eliminates the risks and visibility gaps associated with packet sampling by running NetFlow extraction in parallel with, but out of band from, the production network traffic. With this capability, Gigamon users can generate NetFlow statistics at much higher sampling rates, up to fully unsampled at line rate. If the NetFlow collectors cannot keep up, sampling can be enabled.

Rich Metadata

While NetFlow provides Layer 4 flow data, organizations also need access to Layer 7 or application-level metadata.

How does Gigamon address this challenge? Through IPFIX-based metadata. The Application Metadata Intelligence capability, which includes NetFlow, generates Layer 2 through 7 metadata that is both unsampled and gathered without affecting network performance.
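Application-level metadata, such as an HTTP hostname, is variable in length, which IPFIX supports directly. The sketch below shows the standard RFC 7011 variable-length encoding and a template field specifier for an enterprise-specific element (enterprise bit set, length 65535 meaning "variable"). The element ID 100 is hypothetical, and 32473 is the enterprise number reserved for documentation (RFC 5612); neither reflects how Gigamon actually encodes its fields.

```python
import struct

EXAMPLE_PEN = 32473          # enterprise number reserved for documentation use
HYPOTHETICAL_HOST_IE = 100   # hypothetical element ID for an HTTP hostname field


def enterprise_field_spec(element_id: int, enterprise: int) -> bytes:
    """Template field specifier for a variable-length, enterprise-specific element."""
    # High bit of the element ID flags an enterprise number; length 65535 = variable.
    return struct.pack("!HHI", 0x8000 | element_id, 65535, enterprise)


def encode_varlen(value: bytes) -> bytes:
    """Encode a value for a variable-length IPFIX field."""
    if len(value) < 255:
        return struct.pack("!B", len(value)) + value       # 1-byte length prefix
    if len(value) > 65535:
        raise ValueError("value too long for an IPFIX variable-length field")
    return struct.pack("!BH", 255, len(value)) + value     # 255 marker + 2-byte length


spec = enterprise_field_spec(HYPOTHETICAL_HOST_IE, EXAMPLE_PEN)
host_field = encode_varlen(b"www.example.com")
print(spec.hex(), host_field)
```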

The Proof Is in the Pudding

Today, Gigamon aggregates traffic from multiple 100G links for service providers and produces multiple IPFIX fields without degrading the subscriber experience.

When we first implemented this solution for Gigamon's own IT network, we saw fewer false positives and faster threat detection, and we were able to use our security team much more effectively.

By integrating Application Metadata Intelligence with our existing Security Information and Event Management (SIEM) solution, we were able to identify unusual patterns in Hypertext Transfer Protocol (HTTP) response codes, specific domains indicating a possible security breach, and users attempting to reach sites that use WoSign Secure Sockets Layer (SSL) certificates.
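The kinds of checks described above can be sketched as simple rules over metadata records once they reach a SIEM. The record fields (`client`, `http_status`, `tls_issuer`), thresholds and data below are hypothetical; they illustrate the logic only and are not Gigamon's or any SIEM vendor's actual rules or schema.

```python
from collections import Counter

# Hypothetical shape of application-metadata records after they reach the SIEM.
records = [
    {"client": "10.0.0.5", "http_status": 200, "tls_issuer": "CN=Example CA"},
    {"client": "10.0.0.5", "http_status": 404, "tls_issuer": None},
    {"client": "10.0.0.7", "http_status": 404, "tls_issuer": None},
    {"client": "10.0.0.7", "http_status": 404, "tls_issuer": None},
    {"client": "10.0.0.7", "http_status": 403, "tls_issuer": None},
    {"client": "10.0.0.8", "http_status": 200, "tls_issuer": "CN=Example WoSign Intermediate"},
]


def suspicious_clients(recs: list[dict], min_requests: int = 3,
                       threshold: float = 0.5) -> list[str]:
    """Clients with at least min_requests HTTP responses, mostly 4xx/5xx."""
    totals: Counter = Counter()
    errors: Counter = Counter()
    for r in recs:
        totals[r["client"]] += 1
        if r["http_status"] >= 400:
            errors[r["client"]] += 1
    return [c for c in totals
            if totals[c] >= min_requests and errors[c] / totals[c] > threshold]


def wosign_users(recs: list[dict]) -> list[str]:
    """Clients that reached a server whose certificate issuer mentions WoSign."""
    return sorted({r["client"] for r in recs
                   if r["tls_issuer"] and "wosign" in r["tls_issuer"].lower()})


print(suspicious_clients(records))  # ['10.0.0.7']
print(wosign_users(records))        # ['10.0.0.8']
```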

NetFlow and IPFIX have been, and will continue to be, powerful tools for gaining network visibility. By offloading NetFlow/IPFIX generation to Application Metadata Intelligence from Gigamon, service provider SecOps and NetOps teams are able to keep pace with growing data volume and speed without sacrificing the important insights that can be gained from network monitoring and security analysis.

Download “Harnessing the Power of Metadata for Security” to learn how Metadata Generation gives you security x-ray vision that separates signals from noise, reduces time-to-threat-detection and improves overall security efficacy.
