The Visibility Paradox in Critical Infrastructure Monitoring

This paper is an accompaniment to a presentation given April 22, 2022, at S4x22.

Network defenders and critical infrastructure operators recently learned of two new concerning threats in industrial environments:

- Industroyer2, a potentially updated version of malware first identified in 2016, targeting electric infrastructure in Ukraine

- PIPEDREAM or INCONTROLLER, a framework for manipulating industrial equipment with potentially dire consequences

These events from spring 2022 come after more than a decade of increasing interest in compromising critical infrastructure networks. Yet while the threat landscape appears to continuously evolve, defensive measures in industrial and critical networks have remained largely unchanged and limited.

One of the principal issues impacting critical infrastructure visibility and defense is the nature of many systems as embedded or bespoke devices. Purpose-built, narrowly focused, and often ruggedized for extreme environments, such devices either cannot support or do not allow for significant additions of security tooling and monitoring. Unlike the evolution of endpoint detection and response (EDR) products in the enterprise IT space over the past several years, industrial networks remain an area of limited host visibility and almost completely lack robust host-based response mechanisms.

To counter this, a variety of solutions use network monitoring and traffic analysis to compensate for the lack of visibility on the endpoint. Through network traffic capture and security monitoring, visibility gaps can be reduced, and asset owners gain a level of insight into their networks. This strategy is supported by the nature of most communication within control system environments: unencrypted and “in the clear.” While encryption options exist for certain protocols (such as Modbus or OPC with TLS), these are often “add-ons” or ways of tunneling otherwise insecure traffic over another communication pathway. Such mechanisms can therefore increase processing overhead and communication latency: a trivial issue in most IT environments, but a potential concern in operational technology (OT) networks with strict timing requirements and little spare processing power.
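To make the visibility argument concrete, the following is a minimal sketch of what a passive sensor can recover from a single cleartext Modbus/TCP packet. It assumes the sensor already holds one reassembled TCP payload from a SPAN port or network TAP; `parse_modbus_tcp` and `describe` are hypothetical helpers written for illustration, not part of any product or library. The same few lines of parsing are equally available to an adversary who can observe the traffic.

```python
import struct
from dataclasses import dataclass

# State-changing Modbus function codes (a subset of the public Modbus spec).
WRITE_FUNCTION_CODES = {
    5: "Write Single Coil",
    6: "Write Single Register",
    15: "Write Multiple Coils",
    16: "Write Multiple Registers",
}


@dataclass
class ModbusFrame:
    transaction_id: int
    protocol_id: int
    length: int
    unit_id: int
    function_code: int
    data: bytes


def parse_modbus_tcp(payload: bytes) -> ModbusFrame:
    """Hypothetical helper: decode one cleartext Modbus/TCP frame.

    Assumes `payload` is a single, already reassembled TCP payload.
    """
    if len(payload) < 8:
        raise ValueError("too short for MBAP header plus function code")
    # MBAP header: transaction ID, protocol ID, length (2 bytes each), unit ID (1 byte).
    transaction_id, protocol_id, length, unit_id = struct.unpack(">HHHB", payload[:7])
    if protocol_id != 0:
        raise ValueError("protocol identifier is not 0, so this is not Modbus")
    # The length field counts the unit ID, function code, and data bytes.
    if length < 2 or len(payload) < 6 + length:
        raise ValueError("length field inconsistent with payload")
    function_code = payload[7]
    data = payload[8 : 6 + length]
    return ModbusFrame(transaction_id, protocol_id, length, unit_id, function_code, data)


def describe(frame: ModbusFrame) -> str:
    """Summarize what a passive observer learns from the frame."""
    if frame.function_code == 6 and len(frame.data) >= 4:
        # Write Single Register: 2-byte register address, then 2-byte value.
        address, value = struct.unpack(">HH", frame.data[:4])
        return f"unit {frame.unit_id}: write register {address} = {value}"
    name = WRITE_FUNCTION_CODES.get(frame.function_code)
    if name is not None:
        return f"unit {frame.unit_id}: {name}"
    return f"unit {frame.unit_id}: function code {frame.function_code}"
```

Decoding the bytes `0001 0000 0006 01 06 0010 00ff` with these helpers yields `unit 1: write register 16 = 255`, a state-changing command that is readable by anyone positioned on the path, defender or adversary.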

Consequently, an entire industry of network traffic analysis and monitoring has emerged over the past several years, taking advantage of the transparent nature of (most) industrial and critical infrastructure communication to provide a layer of security and visibility. Yet such visibility comes at a clear cost: the same control system and related traffic remains susceptible to interception by adversaries who gain access to the environment, and could even be captured and manipulated for malicious purposes. Asset owners seemingly face a double-edged sword: achieve some degree of process and network visibility given the lack of other suitable options, but at the cost of potentially enabling adversaries to spy on or even impact the very networks we attempt to secure.

While this risk of adversary traffic interception and manipulation is possible, we must ask whether it is plausible or reflected in actual events. Looking at critical infrastructure-related activity over the past 15 years, the most significant integrity-impacting events, as well as long-running espionage and access campaigns, all share some critical commonalities:

- Frequent use of built-in tools, commands, and other native resources (such as living-off-the-land binaries, or LOLBins) for access and lateral movement, observed in the 2016 power event in Ukraine

- Compromise of systems capable of communicating with field devices or other control systems to deliver impacts, observed in the 2017 Triton incident involving compromise of a safety workstation

- Execution of attacks via abuse of legitimate protocols from a compromised endpoint, seen in both the 2015 and 2016 Ukraine power events

Thus, while traffic manipulation attacks are possible, they are not reflected in any publicly known industrial or critical infrastructure-impacting incident as of this writing. Instead, such attack types are limited to demonstrations, proof-of-concept activities, and lab environments, making them items of note but not yet observed in adversary behavior.

The situation thus presents a dilemma to security practitioners. While traffic manipulation and similar attacks are possible, they are not yet reflected in actual adversary operations. At the same time, most opportunities for security monitoring and visibility are enabled by the very vulnerable traffic streams that pose a potential (if not yet realized) threat. Defenders face a trade-off: maximize security posture through hardened, encrypted communication channels, or enable visibility, at the cost of potential vulnerability, by maintaining (and monitoring) cleartext communications.

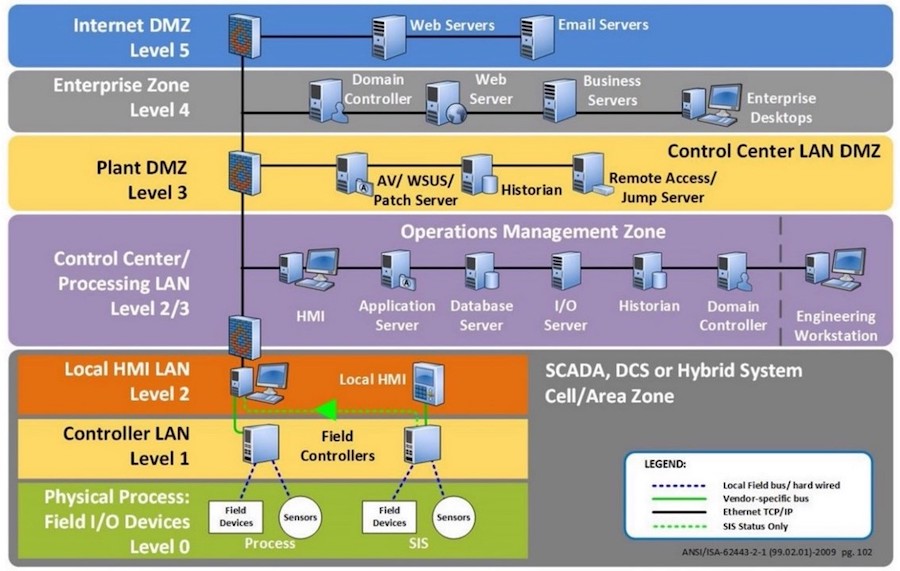

Yet asset owners and defenders are not necessarily presented with a strictly binary decision. Instead, different defensive and monitoring postures may be more relevant and appropriate depending on where in the environment communication takes place. We can break down desired security posture based on specific network enclaves or operating environments, as mapped to a framework such as the Purdue Enterprise Reference Architecture (Figure 1).

Using the above as a guide, we can make the following assessments:

- Level 5 (Internet DMZ, interfacing with external networks): Traffic should be encrypted whenever and wherever possible. When traffic transits infrastructure the enterprise does not own or manage, anything leaving the network should be secured to, at minimum, ensure confidentiality while also protecting against replay attacks or similar actions.

- Level 4 (Enterprise Zone) to Control Center/Processing LAN (Level 2/3): Encryption remains beneficial, especially for traffic that will eventually leave the network, but implementing SSL/TLS inspection or proxying can dramatically improve visibility into activity of interest while retaining many benefits of encrypted communications, particularly for traffic bound for external destinations.

- Level 2 (Local HMI LAN) and lower: Given the constraints identified previously (limited host visibility, strict timing requirements, and limited processing power), visibility and monitoring may only be possible with unencrypted control system and related traffic. However, implementing verification and integrity checks where available may significantly reduce the attack surface for manipulation or replay attacks. Combining these approaches, visibility and monitoring with traffic verification, likely represents the best set of actions to enable security operations without introducing unnecessary vulnerability.
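The per-level assessments above can be expressed as a small lookup that an architecture review or monitoring pipeline could apply to each observed flow. This is a minimal sketch under stated assumptions: `Posture` and `posture_for_flow` are hypothetical names, a flow is classified by the highest Purdue level it touches, and grouping Level 3 to Level 2 traffic with the Level 4 tier is our own assumption, since the assessments only name Level 4 to Level 2/3 traffic explicitly.

```python
from enum import Enum


class Posture(Enum):
    ENCRYPT = "encrypt wherever possible"
    ENCRYPT_WITH_INSPECTION = "encrypt, with SSL/TLS inspection or proxying"
    CLEARTEXT_WITH_INTEGRITY = "monitor in the clear, add integrity checks where available"


def posture_for_flow(src_level: int, dst_level: int) -> Posture:
    """Hypothetical helper: suggest a posture for a flow between two Purdue levels.

    The flow is classified by the highest level it touches. Grouping
    Level 3 <-> Level 2 traffic with the Level 4 tier is an assumption.
    """
    for level in (src_level, dst_level):
        if not 0 <= level <= 5:
            raise ValueError(f"unknown Purdue level: {level}")
    highest = max(src_level, dst_level)
    if highest == 5:
        # Internet DMZ / external-facing traffic.
        return Posture.ENCRYPT
    if highest >= 3:
        # Enterprise zone down to the control center / processing LAN.
        return Posture.ENCRYPT_WITH_INSPECTION
    # Local HMI LAN and below.
    return Posture.CLEARTEXT_WITH_INTEGRITY
```

For example, `posture_for_flow(4, 3)` returns `Posture.ENCRYPT_WITH_INSPECTION`, while `posture_for_flow(2, 1)` returns `Posture.CLEARTEXT_WITH_INTEGRITY`. Keying on the highest level keeps the rule conservative: any flow that reaches toward external networks inherits the stricter posture.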

The above represents a suitable plan for many organizations to blend traffic security with visibility to achieve the best possible mix for overall defense. Using this differentiated, layered approach, exposed traffic is secured, internal traffic and systems are monitored, and critical infrastructure and OT communications remain available for analysis to identify potential attacks.
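For Level 2 and lower, the recommendation is to keep traffic in the clear for monitoring while adding verification and integrity checks where available. One generic way to get integrity and replay protection without confidentiality is a message authentication code computed over a sequence number and the payload. The sketch below assumes a pre-shared key distributed out of band and uses HMAC-SHA256 from Python's standard library; `protect` and `Verifier` are hypothetical names, and this is not the mechanism of any particular industrial protocol.

```python
import hashlib
import hmac
import struct

SEQ_LEN = 8   # big-endian 64-bit sequence number
TAG_LEN = 32  # HMAC-SHA256 output size in bytes


def protect(key: bytes, seq: int, payload: bytes) -> bytes:
    """Sender side: prefix a sequence number and append an HMAC tag.

    The payload itself is left unencrypted so passive monitoring still works.
    """
    body = struct.pack(">Q", seq) + payload
    tag = hmac.new(key, body, hashlib.sha256).digest()
    return body + tag


class Verifier:
    """Receiver side: reject modified messages and replays of old sequence numbers."""

    def __init__(self, key: bytes) -> None:
        self.key = key
        # Held in memory only; a real device would need to persist this across restarts.
        self.last_seq = -1

    def verify(self, message: bytes) -> bytes:
        if len(message) < SEQ_LEN + TAG_LEN:
            raise ValueError("message too short")
        body, tag = message[:-TAG_LEN], message[-TAG_LEN:]
        expected = hmac.new(self.key, body, hashlib.sha256).digest()
        # Constant-time comparison avoids leaking how many tag bytes matched.
        if not hmac.compare_digest(tag, expected):
            raise ValueError("integrity check failed")
        (seq,) = struct.unpack(">Q", body[:SEQ_LEN])
        if seq <= self.last_seq:
            raise ValueError("replayed or out-of-order message")
        self.last_seq = seq
        return body[SEQ_LEN:]
```

Because the payload is never encrypted, a passive sensor can still read it at `message[SEQ_LEN:-TAG_LEN]`, while a receiver running `Verifier.verify` rejects tampered or replayed messages. The cost is 40 bytes per message (an 8-byte counter plus a 32-byte tag) and one hash computation on each side, which should still be measured against the timing requirements of constrained devices.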

However, we must recognize that environments, even OT-related networks, are changing. Increasing use of distributed communications (for example, a single control center managing multiple field sites with traffic flowing over non-private infrastructure) and the incorporation of cloud computing into critical infrastructure operations mean the reference model above will likely be out of date soon, if it is not already. These developments upend enclave-specific security postures and undermine segmentation efforts, making for a much more complex environment.

Under these circumstances, asset owners and network defenders will need to embrace greater communication security as critical traffic increasingly flows over links not controlled by, or limited to, the owning entity. To address the resulting issues in security monitoring and visibility, a combination of SSL/TLS inspection or proxying, where possible, and increased endpoint monitoring capabilities will be necessary to adapt to such changes. While it may be tempting to invoke terms such as Zero Trust as a panacea for these issues, network owners and defenders must realize that any realistic approach to securing increasingly hybrid environments will require a layered, detailed approach extending across network, host, and policy decisions.

At present, a robust understanding of network enclaves, with security and visibility models adopted per enclave and function, remains a viable, if not necessary, approach for most organizations. By concentrating on threats as they exist right now, asset owners and defenders can address immediate needs and current security problems. In doing so, we must not forget or ignore future considerations, as noted previously, but abandoning current security concerns for notional or possible events is likely an ill-advised choice for the majority of organizations. In short, organizations must prepare for and address the threats of today, while simultaneously recognizing and planning for the security concerns of tomorrow.

Threat Research to Accelerate Detection

The Gigamon Applied Threat Research (ATR) team’s mission is to dismantle the ability of an adversary to impact our customers. Our team of expert security researchers, engineers, and analysts focuses on continuous research of threat actors and emerging attack techniques and builds leading-edge detection and investigation capabilities leveraging the vast Gigamon ThreatINSIGHT network of telemetry and intelligence datasets.
