The Alarming Rise of AI-Powered Cyber Attacks: Are You Seeing It?

Unlike any other technology, AI has introduced a new era of sophistication and complexity in the threat landscape. According to the Gigamon Hybrid Cloud Security Survey of more than 1,000 security and IT leaders worldwide, 59 percent report an increase in AI-powered attacks, including smishing, phishing, and ransomware.

These advanced threats leverage artificial intelligence and machine learning (ML) to execute multi-stage attacks, utilizing vectors such as impersonation and social engineering, AI-driven malware, and network exploits to achieve their objectives. The process typically begins with meticulous data collection, followed by pattern analysis and attack planning, allowing threat actors to craft highly targeted and convincing campaigns.

As these attacks adapt and evolve, they pose significant challenges for traditional security measures. This blog explores the intricacies of AI-powered attacks, with a particular focus on data exfiltration scenarios, and provides a deep dive into best practices for defending against these advanced threats, empowering organizations to bolster their defenses and protect sensitive information.

Understanding AI-Powered Cyber Attacks

AI-powered attacks typically use ML algorithms, including unsupervised ones, to analyze vast amounts of data, identify patterns, and adapt to changing security measures.

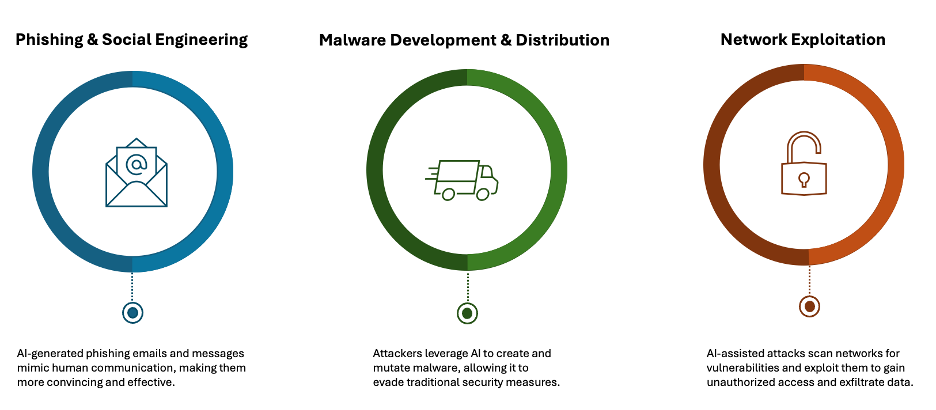

These attacks fall into three broad categories:

- Phishing and social engineering: AI-generated phishing emails and messages can mimic human communication, making them more convincing and effective. A finance professional in Hong Kong was tricked into transferring over $25 million after participating in a video call with deepfakes of the company’s CFO and another colleague (Arup data breach).

- Malware development: AI can create and mutate malware, allowing it to evade traditional security measures. One of the most significant examples of AI-generated malware is polymorphic malware like LummaC2 Stealer. This type of malware can change its code structure every time it infects a new system, effectively evading traditional signature-based detection methods used by endpoint protection tools.

- Network exploitation: AI-assisted attacks can scan networks for vulnerabilities and exploit them to gain unauthorized access and exfiltrate data. In the TaskRabbit data breach, attackers used an AI-enabled botnet to launch a distributed denial-of-service (DDoS) attack, and millions of user records were compromised.

These attacks pose significant threats because their sophistication, speed, and evasive capabilities make detection challenging. In addition, attackers leveraging ML and AI have found more effective ways to spread malware rapidly through lateral movement, bypassing traditional security measures.

How AI-Powered Cyber Attacks Work

AI-powered attacks involve sophisticated, multi-stage processes that can leverage deep learning to breach and exploit targeted systems. These attacks typically follow these steps:

- Data collection: AI systems collect vast amounts of data from various sources, including social media, public records, and even the dark web.

- Pattern analysis: AI algorithms analyze the collected data to identify patterns and vulnerabilities.

- Attack planning: AI systems help the perpetrator plan and execute attacks based on the identified patterns and vulnerabilities.

- Adaptation and evolution: AI-powered attacks can adapt to changing security measures, making them highly effective for long periods.

It is no surprise that AI-powered cyberattacks are a growing concern, particularly when it comes to data exfiltration and intellectual property (IP) leakage.

Data Exfiltration — a Top Attack with Potentially Catastrophic Consequences

This new class of cyber attacks has become increasingly sophisticated, leveraging AI to automate and enhance reconnaissance by gathering target information from public sources and predicting optimal attack times and methods.

Recently, a major healthcare company fell victim to a sophisticated phishing attack. Here’s how it happened:

A threat actor used AI to scrape the social media and public profiles of key employees. This gave the attacker enough information to craft a highly personalized email that mimicked a message from a trusted colleague. The email requested sensitive patient data. Because it was so well crafted, it slipped past traditional security filters.

Once inside the network, the attacker used exploitation tools developed with the help of AI to move laterally and undetected, mimicking normal user behavior to evade anomaly detection systems. As a result, the attacker identified valuable data and exfiltrated it over an extended period without raising any alarms.

As this example shows, AI- and ML-assisted intellectual property leakage poses significant threats in several ways. One is automated data theft, in which exfiltration techniques rapidly steal sensitive data, including IP, at scale. Attackers can also reverse-engineer AI applications to steal the underlying models, which are themselves IP. Finally, they can manipulate training data to produce biased or incorrect outputs, compromising AI-generated content.

These threats require robust security measures to protect sensitive data, such as AI-driven defenses and comprehensive visibility into all data in motion, including AI-generated traffic. That’s why, in the Hybrid Cloud Security Survey, security and IT leaders cited real-time threat monitoring and visibility across all data in motion as their top priority this year for optimizing their defense-in-depth strategies.

Another rising concern is insider threats. AI can amplify the damage by enabling both malicious and unintentional insiders to automate data theft and exfiltrate sensitive information without detection. One notorious case from 2023 was a data leak at a global mobile phone manufacturer. Employees there exposed confidential information by using a generative AI engine to review internal code and sensitive documents. This incident highlighted the risk of confidential data being exposed through interactions with AI engines.

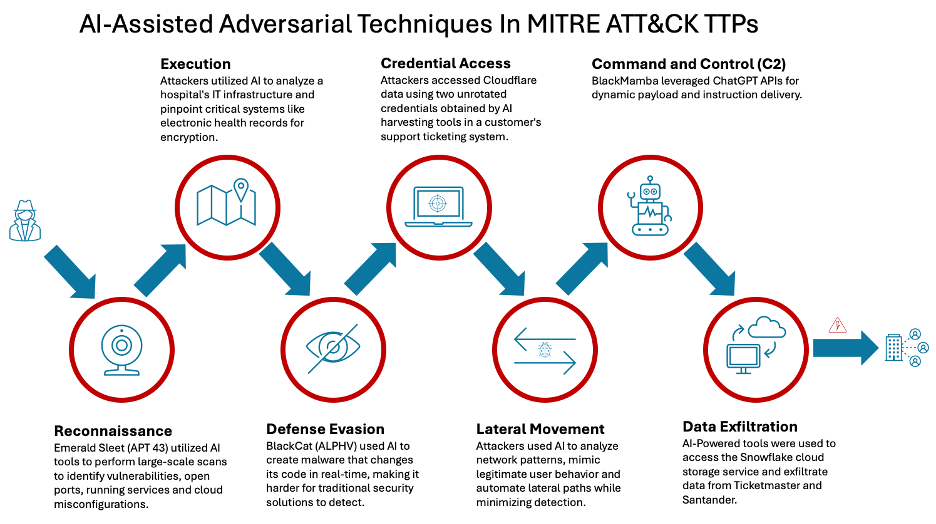

As a next step, let’s use MITRE ATT&CK Tactics, Techniques, and Procedures (TTPs) to expose the stages at which threat actors have leveraged these AI-assisted adversarial techniques:

Defending Against AI-Powered Data Exfiltration Attacks

A layered defense strategy creates an adaptive, AI-resistant security architecture that significantly enhances protection against AI-assisted data exfiltration. Network visibility is essential to this architecture. By exposing stealthy techniques such as protocol hopping, cloud abuse, and low-volume data leaks, it enables early detection across network, endpoint, and cloud layers.

This intelligence accelerates automated response (such as SOAR-driven containment). It also forces attackers into a scalability trap, in which evasion efforts directly reduce exfiltration efficiency. The synergy between visibility and layered controls reduces the number of data breaches and helps meet compliance demands through provable data protection, as summarized in the following table:

Critical Layered Defense Architecture

| Component | Goal (sample) |
|---|---|
| Encrypted traffic analysis | Uncover AI-obfuscated payloads via JA3/JA3S fingerprints |
| NDR solutions | Cross-correlate endpoint/network/cloud telemetry |
| DLP with AI adaptation | Detect data morphing (e.g., PDFs converted to images for evasion) |
| Microsegmentation | Contain lateral movement to limit data access |
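
JA3, named in the encrypted traffic analysis row, fingerprints a TLS client by hashing fields of its ClientHello. JA3S does the same for the server’s ServerHello. Because of this, traffic from the same client software tends to share a fingerprint even when the payload itself is encrypted. The sketch below is a minimal illustration that assumes a sensor has already parsed the ClientHello into integer lists. The function names and the blocklist are hypothetical, and this is not how Gigamon implements the feature.

```python
import hashlib

# GREASE values (RFC 8701) are random placeholders that JA3 ignores:
# 0x0A0A, 0x1A1A, ..., 0xFAFA.
GREASE = {(b << 8) | b for b in range(0x0A, 0x100, 0x10)}


def _join(values):
    """Dash-join decimal values in wire order, skipping GREASE entries."""
    return "-".join(str(v) for v in values if v not in GREASE)


def ja3_string(version, ciphers, extensions, curves, point_formats):
    """Build the JA3 string: Version,Ciphers,Extensions,Curves,PointFormats."""
    return ",".join(
        [str(version), _join(ciphers), _join(extensions), _join(curves), _join(point_formats)]
    )


def ja3_hash(version, ciphers, extensions, curves, point_formats):
    """The JA3 fingerprint is the MD5 hex digest of the JA3 string."""
    s = ja3_string(version, ciphers, extensions, curves, point_formats)
    return hashlib.md5(s.encode("ascii")).hexdigest()


def is_known_bad(fingerprint, blocklist):
    """Hypothetical check against a threat-intel set of JA3 hashes."""
    return fingerprint in blocklist


if __name__ == "__main__":
    fp = ja3_hash(771, [0x0A0A, 4865, 4866], [0x1A1A, 0, 10, 11], [29, 23], [0])
    print(fp, is_known_bad(fp, blocklist=set()))
```

A fingerprint is a weak signal on its own, since legitimate and malicious clients can share a TLS library. It is most useful when correlated with the other telemetry listed in the table.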

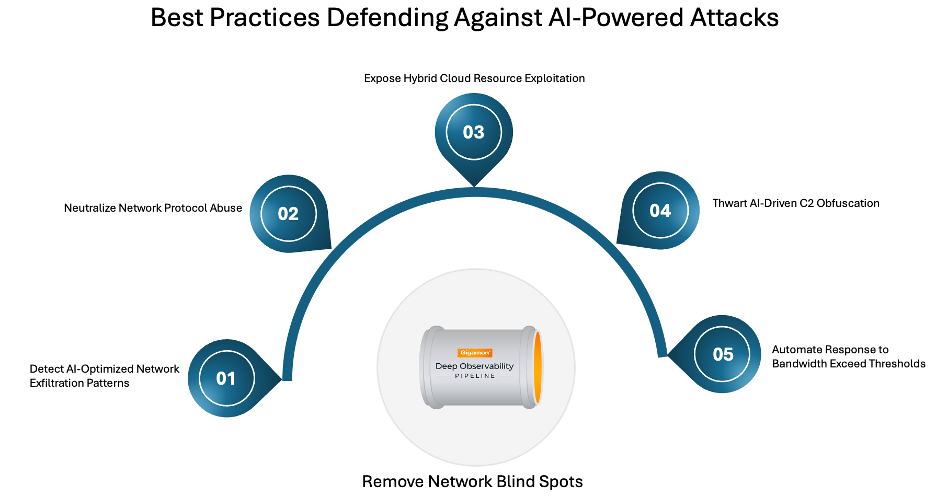

Here are five best practices in which comprehensive network visibility from the Gigamon Deep Observability Pipeline counters AI-assisted data exfiltration attacks. Each is mapped to MITRE ATT&CK techniques such as T1020 (Automated Exfiltration) and T1041 (Exfiltration Over C2 Channel).

1. Detect AI-optimized exfiltration patterns

MITRE techniques: T1020, T1048 (Exfiltration Over Alternative Protocol)

Threat: AI tools dynamically fragment data, mimic legitimate traffic (such as cloud backups), and rotate protocols to evade thresholds

Comprehensive network visibility is essential for:

- Deploying ML-based traffic baselining to spot entropy anomalies in compressed/encrypted data

- Flagging abnormal outbound volumes (like a 500 percent spike in DNS queries from a single host)

- Correlating timing patterns with AI automation signatures (such as burst transfers during off-peak hours)
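
The three checks above can be approximated with simple statistics. This sketch is a hedged illustration, not Gigamon’s detection logic, and it makes several assumptions:

- A plain z-score over each channel’s historical entropy stands in for the ML baseline.
- A “500 percent spike” means a value 500 percent above the baseline mean.
- Business hours are 08:00 to 18:00, and a burst is 50 MB or more. Both figures are hypothetical.

```python
import math
from collections import Counter
from statistics import mean, pstdev


def shannon_entropy(data: bytes) -> float:
    """Bits per byte: 0.0 for constant data, up to 8.0 for uniformly random data."""
    if not data:
        return 0.0
    n = len(data)
    return -sum((c / n) * math.log2(c / n) for c in Counter(data).values())


def entropy_anomaly(baseline: list[float], current: float, z_limit: float = 3.0) -> bool:
    """Flag entropy far above this channel's own history (z-score stand-in for ML)."""
    mu, sigma = mean(baseline), pstdev(baseline)
    return (current - mu) / max(sigma, 1e-6) > z_limit


def volume_spike(history: list[int], current: int, increase_pct: float = 500.0) -> bool:
    """Flag a count (e.g. DNS queries per host per hour) far above its historical mean."""
    base = max(mean(history), 1.0)  # avoid a zero baseline
    return current >= base * (1 + increase_pct / 100)


def off_peak_burst(hour: int, bytes_sent: int,
                   business_hours: tuple[int, int] = (8, 18),
                   burst_bytes: int = 50_000_000) -> bool:
    """Flag large transfers that happen outside (hypothetical) business hours."""
    start, end = business_hours
    return not (start <= hour < end) and bytes_sent >= burst_bytes
```

Encrypted traffic sits near 8 bits per byte by design. For that reason, the sketch compares each channel against its own history rather than against a fixed entropy threshold.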

2. Neutralize protocol abuse

MITRE techniques: T1041, T1572 (Protocol Tunneling)

Threat: AI leverages non-standard protocols (DNS, ICMP, HTTP/2) for stealthy data leaks

Comprehensive network visibility is essential for:

- Decoding protocol metadata for tunneling artifacts (such as DNS TXT records with Base64 payloads)

- Using behavioral analysis to identify “low-and-slow” exfiltration (like 1 KB/s via HTTPS)

- Blocking domains/IPs with high failed-tunnel attempt rates
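
The three checks in this list can be sketched as follows, as a hedged illustration only. The thresholds are hypothetical and would need tuning:

- A 20-character minimum for Base64 candidates.
- A 2 KB/s ceiling sustained for at least an hour.
- A failure rate above 50 percent over at least 20 attempts.

The sketch also assumes that an upstream stage has already classified connection attempts as tunnel attempts. Legitimate TXT records, such as verification tokens, can also carry Base64, so a match is a signal rather than a verdict. Many tunneling tools encode data in query names using Base32 or hex instead, which this sketch does not cover.

```python
import base64
import re

B64_RE = re.compile(r"[A-Za-z0-9+/]+={0,2}")


def looks_like_base64(txt: str, min_len: int = 20) -> bool:
    """True if a TXT record string is a well-formed Base64 blob of meaningful length."""
    if len(txt) < min_len or len(txt) % 4 != 0 or not B64_RE.fullmatch(txt):
        return False
    try:
        base64.b64decode(txt, validate=True)
    except ValueError:  # binascii.Error is a subclass of ValueError
        return False
    return True


def is_low_and_slow(total_bytes: int, duration_s: float,
                    max_rate_bps: float = 2048.0,
                    min_duration_s: float = 3600.0,
                    min_total_bytes: int = 1_000_000) -> bool:
    """Long-lived flow whose average rate stays low but whose total volume is significant."""
    if duration_s < min_duration_s:
        return False
    rate = total_bytes / duration_s
    return rate <= max_rate_bps and total_bytes >= min_total_bytes


def domains_to_block(stats: dict[str, tuple[int, int]],
                     fail_ratio_limit: float = 0.5,
                     min_attempts: int = 20) -> list[str]:
    """stats maps domain -> (tunnel attempts, failed attempts); return domains to block."""
    return sorted(
        domain for domain, (attempts, failed) in stats.items()
        if attempts >= min_attempts and failed / attempts > fail_ratio_limit
    )
```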

3. Expose exploitation of hybrid cloud resources (misconfigured storage buckets, insecure APIs, open ports)

MITRE techniques: T1530 (Data from Cloud Storage), T1213 (Data from Information Repositories)

Threat: AI scans for misconfigured Amazon S3 and Google Cloud Storage buckets and automates bulk data theft

Defense enhancement from network visibility:

- Monitor cloud API traffic for anomalous GetObject/ListBucket sequences

- Map data access patterns to user roles (like a developer account downloading HR files)

- Integrate with CSPM tools to auto-remediate public bucket exposures
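
The first two checks can be sketched over a stream of storage-API events. This is a hedged illustration. The ApiEvent record, the role-to-prefix policy, and the thresholds (100 reads within five minutes of a listing) are all hypothetical. Action names follow the text. In AWS, for instance, s3:ListBucket is the IAM permission behind the object-listing API calls. The third bullet, CSPM auto-remediation, depends on the specific CSPM product’s API, so it is left out rather than guessed at.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class ApiEvent:
    ts: float        # epoch seconds
    principal: str   # user or workload identity
    role: str        # role label, e.g. "developer" (hypothetical)
    action: str      # "ListBucket" or "GetObject", as in the text
    key: str         # object key, e.g. "hr/salaries.csv"


# Hypothetical policy: key prefixes each role is expected to read.
ALLOWED_PREFIXES = {
    "developer": ("src/", "builds/"),
    "hr": ("hr/",),
}


def bulk_read_after_list(events, window_s=300.0, min_gets=100):
    """Principals issuing >= min_gets GetObject calls within window_s of a ListBucket."""
    by_principal = defaultdict(list)
    for ev in sorted(events, key=lambda ev: ev.ts):
        by_principal[ev.principal].append(ev)

    flagged = set()
    for principal, evs in by_principal.items():
        for i, listing in enumerate(evs):
            if listing.action != "ListBucket":
                continue
            gets = 0
            for later in evs[i + 1:]:
                if later.ts - listing.ts > window_s:
                    break  # events are time-ordered, so the window has closed
                if later.action == "GetObject":
                    gets += 1
            if gets >= min_gets:
                flagged.add(principal)
                break
    return flagged


def out_of_role_reads(events):
    """GetObject calls outside the caller's expected prefixes (unknown roles always flag)."""
    return [
        ev for ev in events
        if ev.action == "GetObject"
        and not ev.key.startswith(ALLOWED_PREFIXES.get(ev.role, ()))
    ]
```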

4. Thwart AI-driven C2 obfuscation

MITRE techniques: T1090 (Proxy), T1105 (Ingress Tool Transfer)

Threat: AI rotates C2 infrastructure using fast-flux DNS or cloud services (such as Slack API for data relay)

Defense enhancement from network visibility:

- Analyze TLS handshakes for impersonated certificate patterns

- Detect beaconing intervals adapted to network noise (like randomized 5–15 minute callbacks)

- Trace IP reputation changes mid-session (such as an AWS IP address reused by threat actors)
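
Jittered beaconing is still more regular than human activity. The minimal sketch below assumes that connection timestamps from one host to one destination are already available. It flags a series when two conditions hold:

- Most inter-arrival times fall inside the 5 to 15 minute band from the example.
- The coefficient of variation (standard deviation divided by mean) stays low.

The band, the 90 percent fraction, and the 0.5 limit are hypothetical tuning choices. For reference, a uniformly random 5 to 15 minute interval has a coefficient of variation of roughly 0.29.

```python
from statistics import mean, pstdev


def intervals(timestamps):
    """Gaps between consecutive timestamps, in seconds."""
    ts = sorted(timestamps)
    return [b - a for a, b in zip(ts, ts[1:])]


def looks_like_jittered_beacon(timestamps, band=(300.0, 900.0),
                               min_in_band=0.9, max_cv=0.5, min_events=8):
    """Flag regular-but-jittered callbacks to one destination."""
    if len(timestamps) < min_events:
        return False
    gaps = intervals(timestamps)
    lo, hi = band
    in_band = sum(lo <= g <= hi for g in gaps) / len(gaps)
    avg = mean(gaps)
    if avg <= 0:
        return False
    cv = pstdev(gaps) / avg  # low CV means regular timing despite the jitter
    return in_band >= min_in_band and cv <= max_cv
```

Fast-flux infrastructure rotates IP addresses, so in practice the series would more likely be keyed by domain or TLS fingerprint than by destination IP.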

5. Automate responses to exceeded bandwidth thresholds through integrations

MITRE techniques: T1052 (Exfiltration Over Physical Medium), within the TA0010 (Exfiltration) tactic

Threat: AI switches exfiltration vectors in real time (such as falling back to USB or cloud channels when network exfiltration is blocked)

Defense enhancement from network visibility:

- Trigger bandwidth throttling when data staging exceeds thresholds

- Isolate endpoints exhibiting multi-vector exfiltration attempts

- Enrich alerts with threat intel on AI toolkits (like WormGPT-generated exfiltration scripts)
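
The three responses could be wired together roughly as follows. This is a hypothetical policy sketch, not any SOAR product’s API. The HostActivity record, the 500 MB staging limit, the two-vector isolation rule, and the intel mapping from script hashes to toolkit labels are all assumptions. A real integration would pass the returned actions to whichever firewall, EDR, or SOAR platform is in use.

```python
from dataclasses import dataclass, field

STAGING_LIMIT_BYTES = 500 * 1024 * 1024  # hypothetical threshold


@dataclass
class HostActivity:
    host: str
    staged_bytes: int                                  # data staged in the current window
    vectors: set[str] = field(default_factory=set)     # e.g. {"network", "usb", "cloud"}
    script_hashes: set[str] = field(default_factory=set)


def decide_response(activity: HostActivity, intel: dict[str, str],
                    staging_limit: int = STAGING_LIMIT_BYTES) -> dict:
    """Return an alert with recommended actions and any matching threat-intel labels."""
    actions = []
    if activity.staged_bytes > staging_limit:
        actions.append("throttle")
    if len(activity.vectors) >= 2:  # multi-vector exfiltration attempt
        actions.append("isolate")
    labels = sorted(intel[h] for h in activity.script_hashes if h in intel)
    return {"host": activity.host, "actions": actions, "intel": labels}
```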

Conclusion

The surge in attacks assisted by AI and ML requires immediate attention and action. Eliminating visibility gaps by removing blind spots in the network forces AI attackers into a scalability trap: increased stealth reduces exfiltration speed. That buys defenders critical response time to prevent financial losses, reputational damage, and compliance failures, to name just a few consequences.

Finally, to stay ahead, organizations must prioritize complete visibility into all data in motion and leverage AI-driven defenses, complemented by a culture of cybersecurity awareness.

Ready to learn more about how Gigamon enhances your defenses against AI-powered attacks?
